"Uber engineers have updated the CacheFront architecture to serve over 150 million reads per second while ensuring stronger consistency. The update addresses stale reads in latency-sensitive services and supports growing demand by introducing a new write-through consistency protocol, closer coordination with Docstore, and improvements to Uber's Flux streaming system. In the earlier CacheFront design, a high throughput of 40 million reads per second was achieved by deduplicating requests and caching frequently accessed keys close to application services."

"While effective for scalability, this model lacked robust end-to-end consistency, making it insufficient for workloads requiring the latest data. Cache invalidations relied on time-to-live (TTL) and change data capture (CDC), which introduced eventual consistency and delayed visibility of updates. This also created specific issues: in read-own-writes inconsistency, a row that is read, cached, and then updated might continue serving stale values until invalidated or expired."

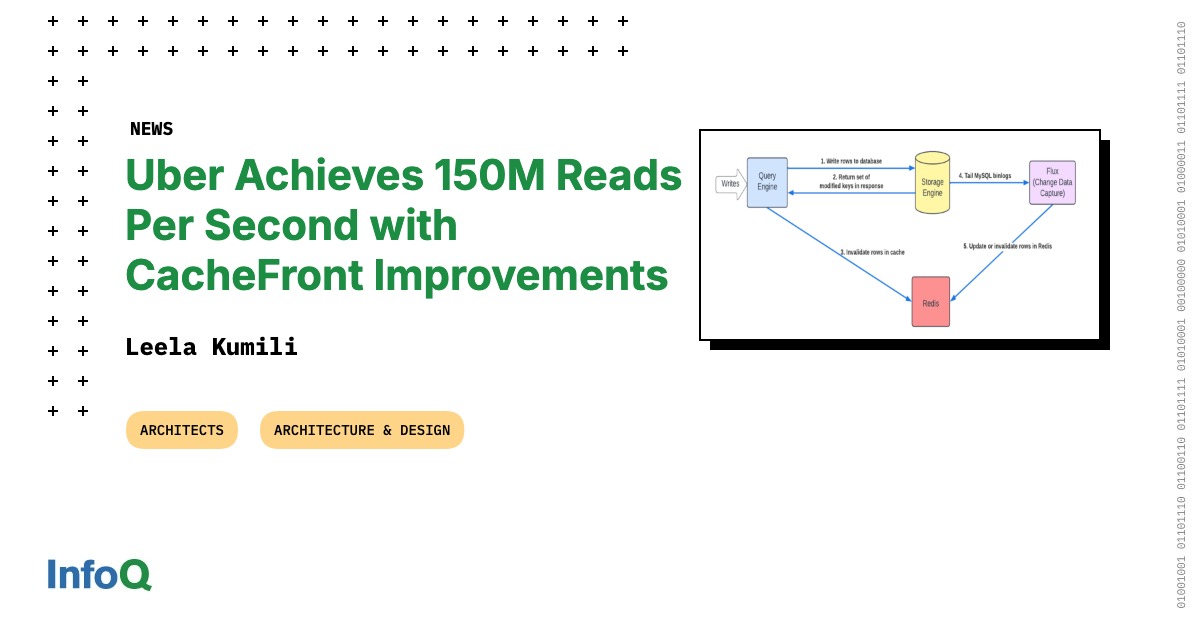

"The new implementation introduces a write-through consistency protocol along with a deduplication layer positioned between the query engine and Flux, Uber's streaming update system. Each CacheFront node now validates data freshness with Docstore before serving responses. The storage engine layer includes tombstone markers for deleted rows and strictly monotonic timestamps for MySQL session allocation. These mechanisms allow the system to efficiently identify and read back all modified keys, including deletes, just before commit, ensuring that no stale data is served even under high load."

CacheFront has been updated to handle over 150 million reads per second while providing stronger consistency for latency-sensitive services. The previous design achieved about 40 million reads per second by deduplicating requests and caching hot keys near services but relied on TTL and CDC-based invalidations that caused eventual consistency and stale reads. Read-own-writes and read-own-inserts inconsistencies could occur and negative caching produced incorrect misses. The new design adds a write-through consistency protocol, a deduplication layer between the query engine and Flux, per-node Docstore freshness validation, tombstone markers for deletions, and strictly monotonic MySQL timestamps to detect and read modified keys before commit.

Read at InfoQ

Unable to calculate read time

Collection

[

|

...

]