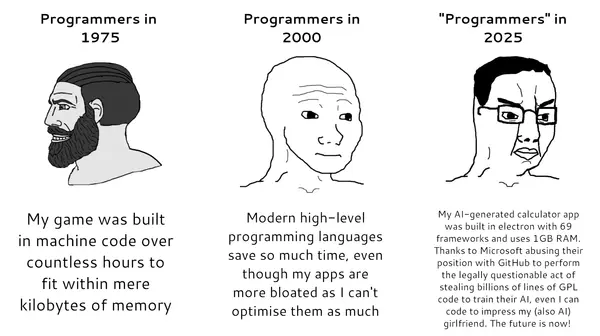

"Historically, computers had far less computing power and memory. CPUs and memory were expensive, and programmers were much more constrained by CPU speed and available memory. A lot of work went into fitting a program into these limited resources. It's no surprise that programs of the 1970s and 1980s were commonly written in very low-level languages, such as machine code or assembly, which give programmers ultimate control over every byte and processor instruction used."

"When you compare the system requirements of older and newer generations of software, the difference can be shocking. Compare, say, the Windows 11 Calculator on its own (never mind the full OS) with the whole Windows 95 OS. The Calculator consumes over 30 MiB of RAM (possibly an underestimate, because shared memory is not included), while Windows 95 could run with as little as 4 MiB of RAM."

As computing resources became cheaper, high-level languages and garbage-collected runtimes became practical; they trade memory and CPU for developer productivity, maintainability, and rapid prototyping. Many modern programs include large frameworks, abstractions, and shared libraries that increase resource usage compared with older low-level implementations. Some bloat is an explicit tradeoff that simplifies development and shortens time-to-market. Other bloat stems from unnecessary inefficiency, outdated designs, or careless dependencies, and is simply harmful. Performance-critical bottlenecks still receive focused optimization, but postponing optimization too long can force costly rewrites. Effective engineering balances resource efficiency with developer velocity and targets optimization where it yields meaningful benefit.

Read at WaspDev Blog
