A few years back, Google made waves when it claimed that some of its hardware had achieved quantum supremacy, performing operations that would be effectively impossible to simulate on a classical computer. That claim didn't hold up especially well, as mathematicians later developed methods to help classical computers catch up, leading the company to repeat the work on an improved processor.

While this back-and-forth was unfolding, the field became less focused on quantum supremacy and more on two other measures of success. The first is quantum utility, in which a quantum computer performs computations that are useful in some practical way. The second is quantum advantage, in which a quantum system completes calculations in a fraction of the time it would take a typical computer. (IBM and a startup called Pasqal have published a useful discussion about what would be required to verifiably demonstrate a quantum advantage.)

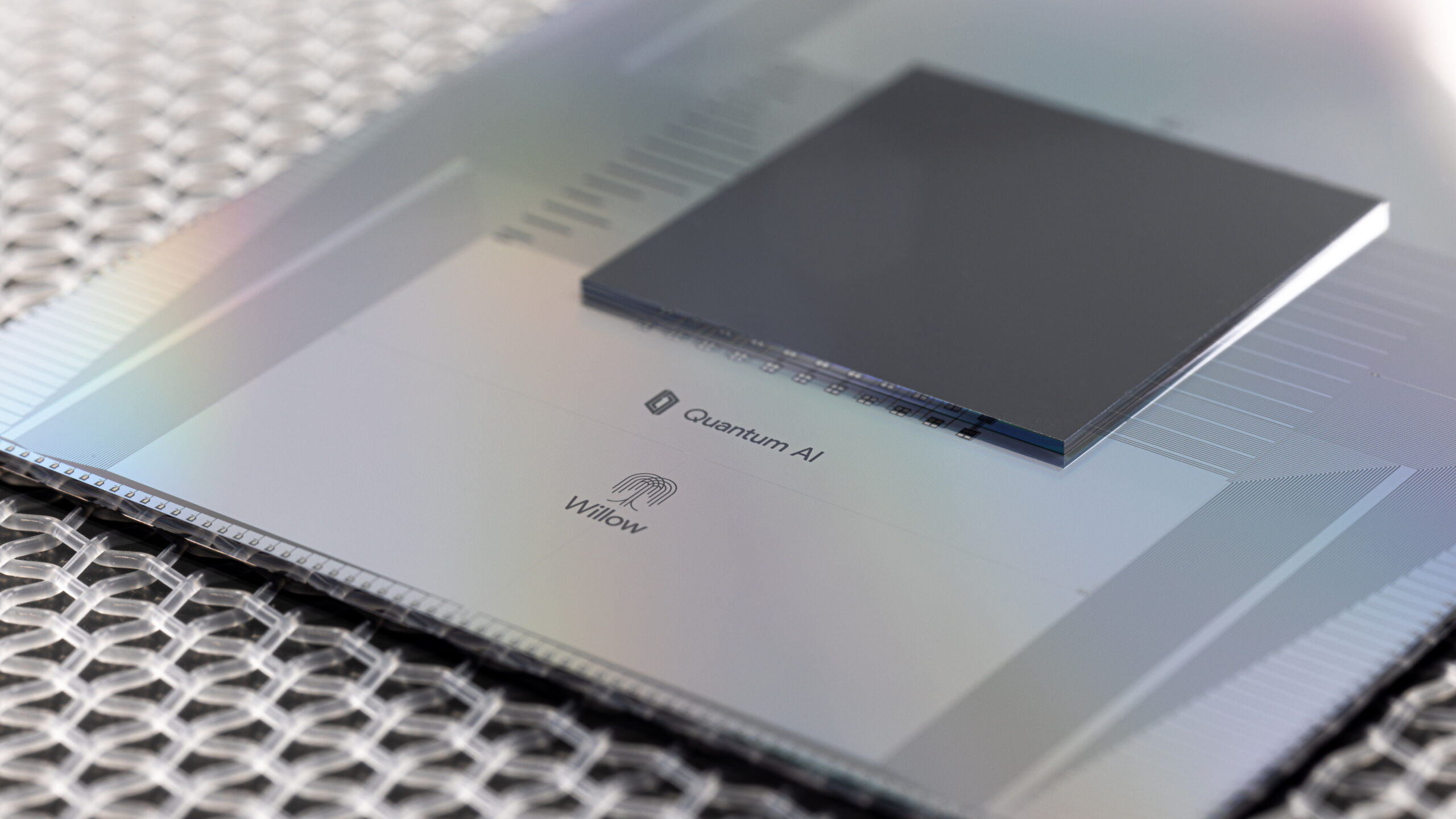

Google's latest effort centers on something it's calling "quantum echoes." The approach could be described as a series of operations on the hardware qubits that make up its machine. These qubits hold a single bit of quantum information in a superposition between two values, with probabilities of finding the qubit in one value or the other when it's measured. Each qubit is entangled with its neighbors, allowing its probability to influence those of all the qubits around it.

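To make superposition and entanglement concrete, here is a minimal, idealized sketch that tracks the four complex amplitudes of just two qubits. It assumes noiseless gates and orders the basis states as |q0 q1⟩; the helper names (`hadamard_q0`, `cnot_q0_to_q1`, `probabilities`) are hypothetical illustrations, not Google's software or the operations its hardware actually runs. A one-qubit Hadamard gate puts qubit 0 into an equal superposition, and a two-qubit CNOT gate then entangles it with qubit 1, so that measuring one qubit fixes the outcome of the other.

```python
import math

# Toy two-qubit state vector. Assumed basis ordering: index = 2*q0 + q1,
# so the four amplitudes belong to |00>, |01>, |10>, |11>.


def hadamard_q0(state):
    """Apply a Hadamard gate to qubit 0, creating an equal superposition."""
    s = 1 / math.sqrt(2)
    out = [0j] * 4
    for q1 in (0, 1):
        a0 = state[0 * 2 + q1]  # amplitude with q0 = 0
        a1 = state[1 * 2 + q1]  # amplitude with q0 = 1
        out[0 * 2 + q1] = s * (a0 + a1)
        out[1 * 2 + q1] = s * (a0 - a1)
    return out


def cnot_q0_to_q1(state):
    """Flip qubit 1 whenever qubit 0 is 1 (an entangling two-qubit gate)."""
    out = list(state)
    out[2], out[3] = state[3], state[2]  # swap the |10> and |11> amplitudes
    return out


def probabilities(state):
    """Probability of each measurement outcome is |amplitude|**2."""
    return [abs(a) ** 2 for a in state]


state = [1 + 0j, 0j, 0j, 0j]  # both qubits start in |0>
state = hadamard_q0(state)
print("after H:   ", [round(p, 3) for p in probabilities(state)])

state = cnot_q0_to_q1(state)
probs = probabilities(state)
print("after CNOT:", [round(p, 3) for p in probs])

# Conditional probability that qubit 1 reads 1, given qubit 0 read 1.
p_q0_is_1 = probs[2] + probs[3]
print("P(q1=1 | q0=1) =", round(probs[3] / p_q0_is_1, 3))
```

After the Hadamard, |00⟩ and |10⟩ each carry probability 0.5; after the CNOT, the probability sits on |00⟩ and |11⟩, so qubit 1 is certain to read 1 once qubit 0 has read 1. Real hardware chains many such gates across many qubits, and noise means measured probabilities only approximate these ideal values.
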
Early quantum supremacy claims were challenged when improved classical algorithms narrowed the gap, prompting a shift toward quantum utility and quantum advantage. Quantum utility refers to computations with practical value; quantum advantage refers to completing tasks much faster than typical classical machines can. "Quantum echoes" uses sequences of operations on hardware qubits, exploiting superposition and the entanglement between neighboring qubits. Gates manipulate qubit probabilities; most devices use one- and two-qubit operations, and noise and control errors complicate scaling. Simulating "quantum echoes" on a classical supercomputer takes roughly 13,000 times longer than running them on the quantum hardware, a substantial classical computational cost.
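
One way to get a feel for why classical simulation gets expensive: a brute-force simulator stores one complex amplitude per basis state, so n qubits need 2^n amplitudes. The sketch below assumes 16 bytes per amplitude (a complex number held as two 64-bit floats), and its function names are hypothetical. It does not reproduce the 13,000x figure, and cleverer classical algorithms can avoid storing the full vector, so treat it as rough intuition for how the cost climbs rather than a model of the comparison Google ran.

```python
# Rough memory estimate for brute-force state-vector simulation.
# Assumption: each amplitude is a complex number stored as two 64-bit floats.
BYTES_PER_AMPLITUDE = 16


def state_vector_bytes(n_qubits: int) -> int:
    """Bytes needed to hold all 2**n_qubits complex amplitudes."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


def human_readable(nbytes: int) -> str:
    """Format a byte count with binary (1024-based) units."""
    value = float(nbytes)
    for unit in ("B", "KiB", "MiB", "GiB", "TiB", "PiB"):
        if value < 1024:
            return f"{value:g} {unit}"
        value /= 1024
    return f"{value:g} EiB"


for n in (10, 20, 30, 40, 50, 60):
    # Every extra qubit doubles the number of amplitudes to store.
    print(f"{n:>2} qubits -> {human_readable(state_vector_bytes(n))}")
```

Because each additional qubit doubles the memory, the listing climbs from 16 KiB at 10 qubits to 16 EiB at 60.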

Read at Ars Technica
