"In the previous lesson, you learned how to turn text into embeddings - compact, high-dimensional vectors that capture semantic meaning. By computing cosine similarity between these vectors, you could find which sentences or paragraphs were most alike. That worked beautifully for a small handcrafted corpus of 30-40 paragraphs. But what if your dataset grows to millions of documents or billions of image embeddings? Suddenly, your brute-force search breaks down - and that's where Approximate Nearest Neighbor (ANN) methods come to the rescue."

"That's fine for 500 vectors, but catastrophic for 50 million. Let's do a quick back-of-the-envelope estimate: Even on modern hardware capable of tens of gigaflops (GFLOPS) per second, brute-force search over billions of vectors still incurs multi-second latency per query - before accounting for memory bandwidth limits - making indexing essential for production-scale retrieval. High-dimensional data introduces another subtle problem: distances begin to blur. In 384-dimensional space, most points are almost equally distant from each other. This phenomenon, cal"

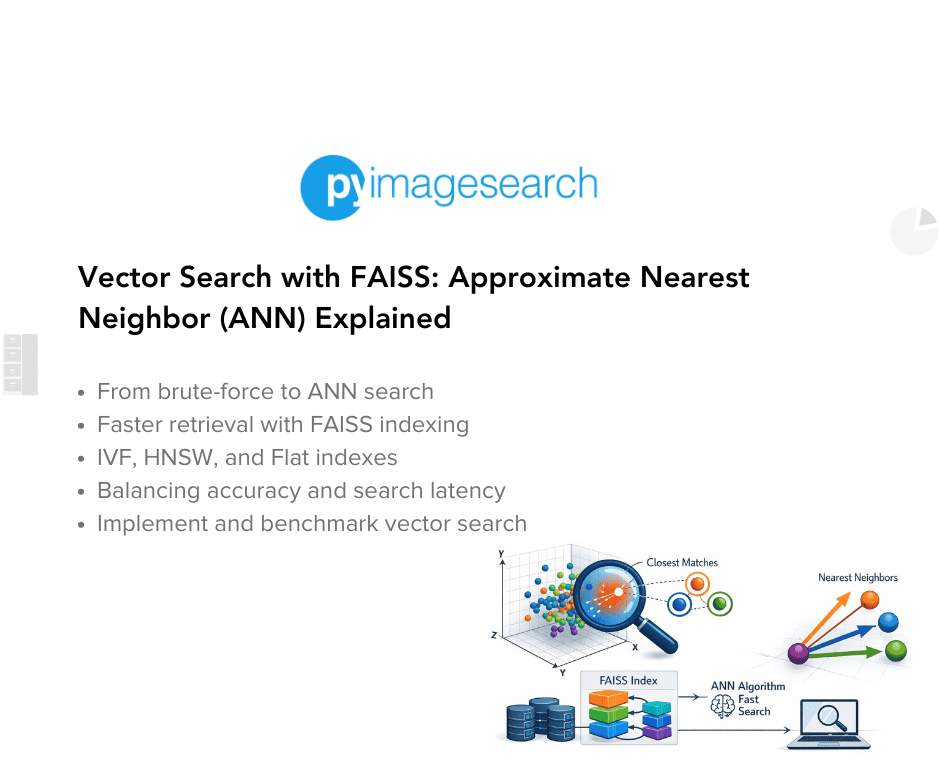

Embeddings are compact, high-dimensional vectors that capture semantic meaning and enable semantic search via cosine similarity. Brute-force search computes a dot product between the query and every stored embedding, so query cost grows linearly with dataset size and latency becomes prohibitive at millions or billions of vectors. Modern hardware sustains tens of GFLOPS, but memory bandwidth and sheer data volume still produce multi-second query times without an index. High dimensionality makes retrieval harder still: distances concentrate, so the nearest and farthest neighbors become difficult to tell apart. Approximate Nearest Neighbor (ANN) indexes trade a minor loss in recall for large latency reductions; in FAISS, Flat serves as the exact brute-force baseline, while IVF and HNSW are approximate indexes. Benchmarking reveals the recall-versus-latency tradeoffs as datasets scale.
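The distance-concentration effect in this summary can be checked empirically. The sketch below is an illustration under loud assumptions: random Gaussian points stand in for real embeddings (which are not distributed this way, so the size of the effect will differ), Euclidean distance is used rather than cosine similarity, and `relative_contrast` is a hypothetical helper, not part of FAISS or the original article.

```python
import math
import random


def relative_contrast(dim, num_points=200, seed=0):
    """(farthest - nearest) / nearest distance from one query to random points.

    A large value means the nearest neighbor stands out clearly;
    a value near zero means all points look almost equally far away.
    """
    rng = random.Random(seed)
    # Assumption: i.i.d. Gaussian coordinates as a stand-in for embeddings.
    query = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    distances = []
    for _ in range(num_points):
        point = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        distances.append(math.dist(query, point))
    nearest, farthest = min(distances), max(distances)
    return (farthest - nearest) / nearest


for dim in (2, 32, 384):
    print(f"dim={dim:>3}: relative contrast = {relative_contrast(dim):.3f}")
```

As the dimension grows, the gap between the nearest and farthest point shrinks relative to the nearest distance. That shrinking contrast is what the summary means by distances concentrating and discrimination degrading.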

Read at PyImageSearch
