"In the first two lessons of this series, we explored how modern attention mechanisms like Grouped Query Attention (GQA) and Multi-Head Latent Attention (MLA) can significantly reduce the memory footprint of key-value (KV) caches during inference. GQA introduced a clever way to share keys and values across query groups, striking a balance between expressiveness and efficiency. MLA took this further by learning a compact latent space for attention heads, enabling more scalable inference without sacrificing model quality."
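To make the memory savings concrete, here is a minimal sketch that counts how many numbers each scheme caches per token, per layer. The head counts and dimensions are hypothetical. The MLA count assumes a DeepSeek-V2-style design that caches one latent vector plus a small decoupled RoPE key, a detail the excerpt above does not spell out.

```python
# Minimal sketch: per-token, per-layer KV-cache element counts.
# All configuration values are hypothetical, chosen only for illustration.


def mha_cache_elems(n_heads: int, head_dim: int) -> int:
    # Standard multi-head attention caches one key and one value vector per head.
    return 2 * n_heads * head_dim


def gqa_cache_elems(n_kv_heads: int, head_dim: int) -> int:
    # GQA caches keys/values only for the shared KV heads; every query head
    # in a group reads the same cached KV head.
    return 2 * n_kv_heads * head_dim


def mla_cache_elems(latent_dim: int, rope_dim: int) -> int:
    # Assumption: MLA caches one compressed latent per token (keys and values
    # are re-projected from it) plus a small decoupled RoPE key.
    return latent_dim + rope_dim


def kv_head_for_query_head(q_head: int, n_heads: int, n_kv_heads: int) -> int:
    # In GQA, consecutive query heads form groups that share one KV head.
    group_size = n_heads // n_kv_heads
    return q_head // group_size


if __name__ == "__main__":
    n_heads, n_kv_heads, head_dim = 32, 8, 128  # hypothetical model config
    print("MHA:", mha_cache_elems(n_heads, head_dim))
    print("GQA:", gqa_cache_elems(n_kv_heads, head_dim))
    print("MLA:", mla_cache_elems(latent_dim=512, rope_dim=64))
    print([kv_head_for_query_head(q, n_heads, n_kv_heads) for q in range(8)])
```

With these numbers, GQA caches a quarter of what MHA does (2048 vs. 8192 elements), because four query heads share each KV head.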

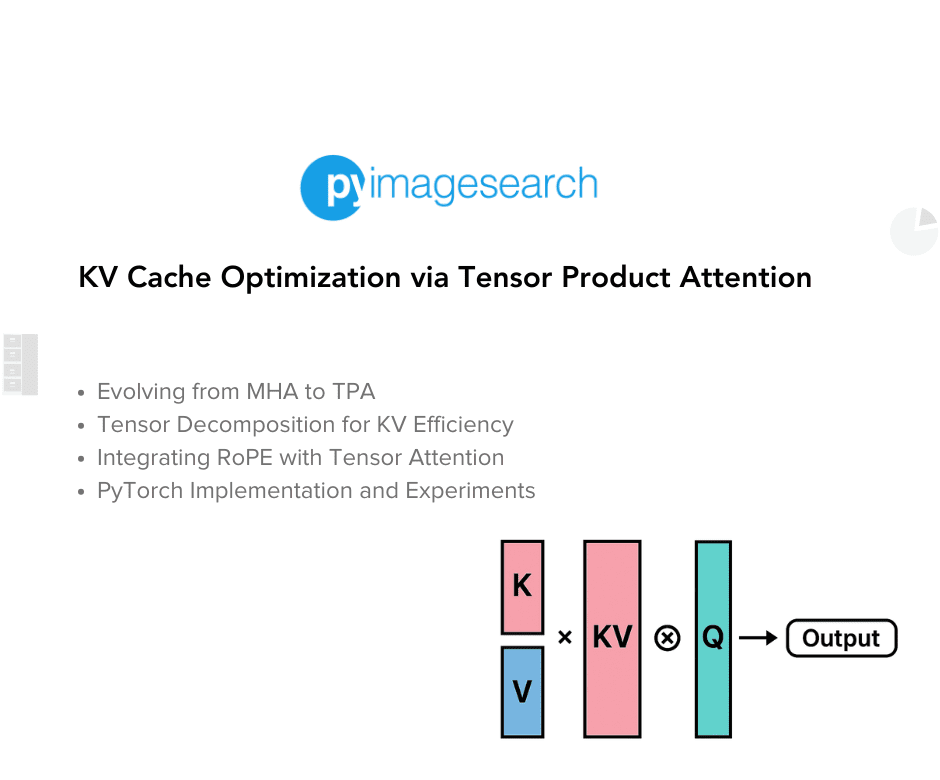

"Now, in this third installment, we dive into Tensor Product Attention (TPA), a novel approach that reimagines the very structure of attention representations. TPA leverages tensor decompositions to factorize queries, keys, and values into low-rank contextual components, enabling a highly compact and expressive representation. This not only slashes KV cache size but also integrates seamlessly with Rotary Positional Embeddings (RoPE), preserving positional awareness."
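A minimal sketch of the factorization idea for a single token's keys follows. It assumes the formulation from the TPA paper as we understand it: the token's h × d_h key matrix is the scaled sum of R outer products a_r ⊗ b_r, where a_r has length h (a head factor) and b_r has length d_h (a feature factor). In a real model both factors come from learned linear projections of the hidden state; here they are passed in directly, and the function names are hypothetical.

```python
# Minimal sketch of a TPA-style low-rank factorization (pure Python, toy sizes).
# Assumption: K_t = (1/R) * sum_r outer(a_r, b_r), with a_r in R^h and b_r in R^{d_h}.
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def outer(a: Vector, b: Vector) -> Matrix:
    # Outer product: entry (i, j) is a[i] * b[j].
    return [[ai * bj for bj in b] for ai in a]


def tpa_reconstruct(a_factors: List[Vector], b_factors: List[Vector]) -> Matrix:
    """Return (1/R) * sum_r outer(a_r, b_r) as an h x d_h matrix."""
    rank = len(a_factors)
    h, d = len(a_factors[0]), len(b_factors[0])
    out = [[0.0] * d for _ in range(h)]
    for a, b in zip(a_factors, b_factors):
        for i, row in enumerate(outer(a, b)):
            for j, v in enumerate(row):
                out[i][j] += v / rank
    return out


def cached_elems(rank: int, n_heads: int, head_dim: int) -> int:
    # Only the factors are cached: rank * (h + d_h) numbers instead of h * d_h.
    return rank * (n_heads + head_dim)


if __name__ == "__main__":
    a = [[1.0, 0.0], [0.0, 1.0]]             # R = 2 head factors, h = 2
    b = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]   # R = 2 feature factors, d_h = 3
    print(tpa_reconstruct(a, b))
    # Hypothetical config: rank 2, 32 heads, head_dim 128 -> 320 vs. 4096 per key.
    print(cached_elems(2, 32, 128), 32 * 128)
```

The saving comes from caching the factors rather than the reconstructed matrix: when R is small, R(h + d_h) is far smaller than h · d_h, and the full keys are rebuilt on the fly.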

Grouped Query Attention (GQA) reduces the KV cache by sharing keys and values across query groups, balancing expressiveness and efficiency. Multi-Head Latent Attention (MLA) shrinks the cache further by learning a compact latent space for attention heads, enabling scalable inference without sacrificing model quality. Tensor Product Attention (TPA) uses tensor decompositions to factorize queries, keys, and values into low-rank contextual components, producing highly compact yet expressive representations. TPA markedly lowers KV cache size while preserving positional awareness through its integration with Rotary Positional Embeddings (RoPE). Its low-rank factors enable scalable, high-performance LLM inference with a reduced memory footprint.
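One way to see why the factorization and RoPE can coexist: RoPE is a linear rotation along the d_h feature axis, applied identically to every head, so rotating the R cached feature factors b_r before reconstruction gives the same keys as rotating every head's row afterwards. The sketch below checks this numerically. It assumes the original adjacent-pair RoPE convention (some libraries rotate split halves instead) and the TPA key form K_t = (1/R) Σ a_r ⊗ b_r; all function names are hypothetical.

```python
# Minimal sketch: applying RoPE to TPA feature factors commutes with reconstruction.
# Assumptions: adjacent-pair RoPE, and K = (1/R) * sum_r outer(a_r, b_r).
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def rope(x: Vector, pos: int, base: float = 10000.0) -> Vector:
    """Rotate pairs (x[2k], x[2k+1]) by pos * base**(-2k/d); len(x) must be even."""
    d = len(x)
    out = list(x)
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)  # i = 2k, so this is base**(-2k/d)
        c, s = math.cos(theta), math.sin(theta)
        out[i] = x[i] * c - x[i + 1] * s
        out[i + 1] = x[i] * s + x[i + 1] * c
    return out


def factored_key(a_factors: List[Vector], b_factors: List[Vector]) -> Matrix:
    # Rebuild the h x d_h key matrix from its rank-R factors.
    rank = len(a_factors)
    h, d = len(a_factors[0]), len(b_factors[0])
    k = [[0.0] * d for _ in range(h)]
    for a, b in zip(a_factors, b_factors):
        for i in range(h):
            for j in range(d):
                k[i][j] += a[i] * b[j] / rank
    return k


def rope_then_reconstruct(a_factors, b_factors, pos: int) -> Matrix:
    # Rotate only the R cached feature factors (R vectors of length d_h).
    return factored_key(a_factors, [rope(b, pos) for b in b_factors])


def reconstruct_then_rope(a_factors, b_factors, pos: int) -> Matrix:
    # Rotate each head's row of the full h x d_h key matrix.
    return [rope(row, pos) for row in factored_key(a_factors, b_factors)]


if __name__ == "__main__":
    a = [[1.0, -0.5, 2.0], [0.3, 0.7, -1.0]]             # R = 2, h = 3
    b = [[0.2, 0.4, -0.1, 0.8], [1.0, -0.3, 0.5, 0.0]]   # R = 2, d_h = 4
    k1 = rope_then_reconstruct(a, b, pos=5)
    k2 = reconstruct_then_rope(a, b, pos=5)
    print(max(abs(x - y) for r1, r2 in zip(k1, k2) for x, y in zip(r1, r2)))
```

The printed maximum difference should be at floating-point rounding level. In this view, positions can be encoded once on the small cached factors instead of on every head's full key.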

#tensor-product-attention #kv-cache-optimization #rotary-positional-embeddings-rope #tensor-decomposition

Read at PyImageSearch
