"Transformer-based language models have long relied on Key-Value (KV) caching to accelerate autoregressive inference. By storing previously computed key and value tensors, models avoid redundant computation across decoding steps. However, as sequence lengths grow and model sizes scale, the memory footprint and compute cost of KV caches become increasingly prohibitive, especially in deployment scenarios that demand low latency and high throughput."

"Recent innovations, such as Multi-head Latent Attention (MLA), notably explored in DeepSeek-V2, offer a compelling alternative. Instead of caching full-resolution KV tensors for each attention head, MLA compresses them into a shared latent space using low-rank projections. This not only reduces memory usage but also enables more efficient attention computation without sacrificing model quality. Inspired by this paradigm, this post dives into the mechanics of KV cache optimization through MLA, unpacking its core components: low-rank KV projection and up-projection for decoding."
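
To make the memory argument concrete, here is a rough back-of-the-envelope sketch comparing a full per-head KV cache with a single shared latent vector per token. The layer count, head count, head dimension, latent dimension, and 2-byte element size are hypothetical values picked only for illustration, not figures from the post or from DeepSeek-V2. The helper `kv_cache_bytes` is likewise made up for this example, and the estimate ignores any extra positional component a real MLA implementation may also cache.

```python
def kv_cache_bytes(n_layers, seq_len, per_token_elems, bytes_per_elem=2):
    """Bytes needed to cache `per_token_elems` values per token in every layer."""
    return n_layers * seq_len * per_token_elems * bytes_per_elem


# Hypothetical configuration, chosen only for illustration.
n_layers, n_heads, head_dim = 32, 32, 128
latent_dim = 512
seq_len = 4096

# Standard caching: one key and one value vector per head, per token.
full_per_token = 2 * n_heads * head_dim
# Latent caching: one shared low-rank vector per token.
latent_per_token = latent_dim

full = kv_cache_bytes(n_layers, seq_len, full_per_token)
latent = kv_cache_bytes(n_layers, seq_len, latent_per_token)
ratio = full / latent

print(f"full KV cache:   {full / 1024**2:.0f} MiB")
print(f"latent KV cache: {latent / 1024**2:.0f} MiB")
print(f"reduction:       {ratio:.0f}x")
```

Under these assumed sizes the per-sequence cache shrinks by the ratio `2 * n_heads * head_dim / latent_dim`, so the saving depends entirely on how small the latent dimension can be made without hurting quality.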

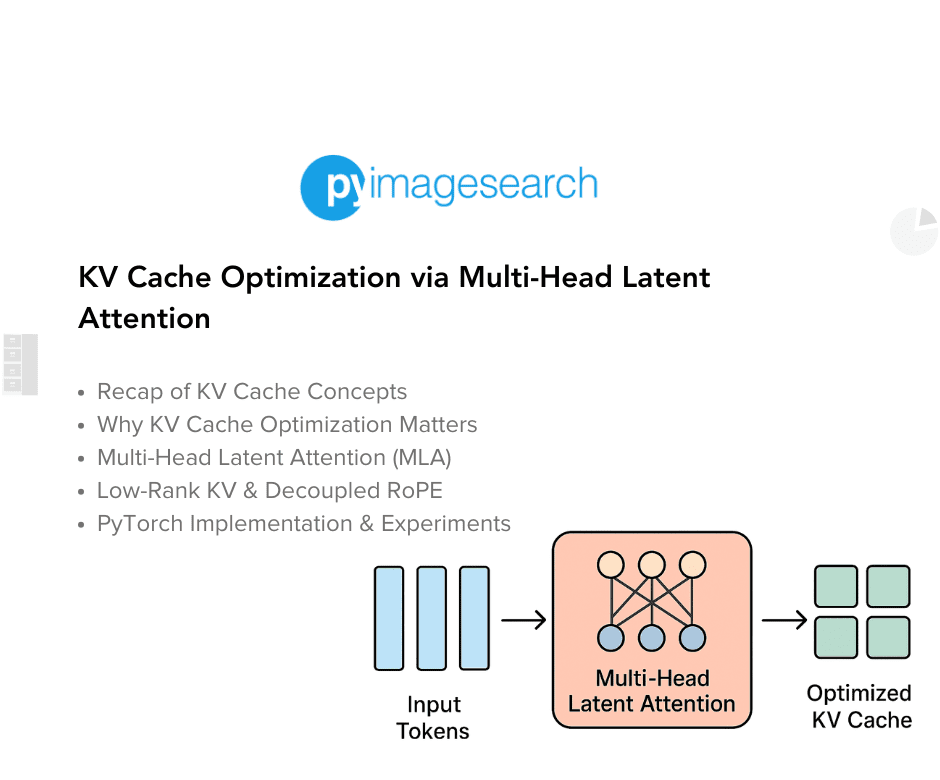

KV caching stores previously computed key and value tensors to avoid redundant computation during autoregressive decoding. Growing sequence lengths and larger model sizes cause KV caches to consume substantial memory and compute, which impedes low-latency, high-throughput deployment. Multi-head Latent Attention (MLA) compresses per-head KV tensors into a shared low-rank latent space via low-rank projections, reducing memory use and enabling more efficient attention computation without sacrificing model quality. Core components include low-rank KV projection, an up-projection step during decoding, and a RoPE variant that decouples positional encoding from head-specific KV storage. These techniques collectively yield a leaner, faster attention mechanism.
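
The down-projection and up-projection steps described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the post's or DeepSeek-V2's implementation: the dimensions are tiny and hypothetical, random matrices stand in for learned weights, the names `W_down`, `W_up_k`, `W_up_v`, `W_q`, and `decode_step` are invented for this example, and the RoPE variant is left out entirely.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only to keep the example small.
d_model, n_heads, head_dim, latent_dim = 64, 4, 16, 8

# Random matrices stand in for learned projection weights.
W_down = rng.standard_normal((d_model, latent_dim)) / np.sqrt(d_model)
W_up_k = rng.standard_normal((latent_dim, n_heads * head_dim)) / np.sqrt(latent_dim)
W_up_v = rng.standard_normal((latent_dim, n_heads * head_dim)) / np.sqrt(latent_dim)
W_q = rng.standard_normal((d_model, n_heads * head_dim)) / np.sqrt(d_model)


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def decode_step(h_t, latent_cache):
    """Run one decoding step for hidden state h_t of shape (d_model,)."""
    # Low-rank KV projection: only this small latent vector is cached.
    latent_cache.append(h_t @ W_down)
    C = np.stack(latent_cache)                        # (T, latent_dim)

    # Up-projection: rebuild per-head keys and values from the shared latents.
    K = (C @ W_up_k).reshape(-1, n_heads, head_dim)   # (T, H, D)
    V = (C @ W_up_v).reshape(-1, n_heads, head_dim)   # (T, H, D)

    q = (h_t @ W_q).reshape(n_heads, head_dim)        # (H, D)
    scores = np.einsum("hd,thd->ht", q, K) / np.sqrt(head_dim)
    weights = softmax(scores, axis=-1)                # (H, T)
    out = np.einsum("ht,thd->hd", weights, V)         # (H, D)
    return out.reshape(-1)                            # (H * D,)


cache = []
outputs = [decode_step(rng.standard_normal(d_model), cache) for _ in range(5)]

print("cached values per token:", cache[0].size)
print("full KV values per token:", 2 * n_heads * head_dim)
```

In this sketch each token adds `latent_dim` numbers to the cache instead of `2 * n_heads * head_dim`, at the cost of re-running the up-projection over the cached latents at every step. Avoiding that recomputation would take further optimization, which this example does not attempt.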

Read at PyImageSearch
