"Imagine asking a friend to find any object in a picture simply by describing it. This is the promise of open-set object detection: the ability to spot and localize arbitrary objects (even ones never seen in training) by name or description. Unlike a closed-set detector trained on a fixed list of classes (say, "cat", "dog", "car"), an open-set detector can handle new categories on the fly, simply from language cues."

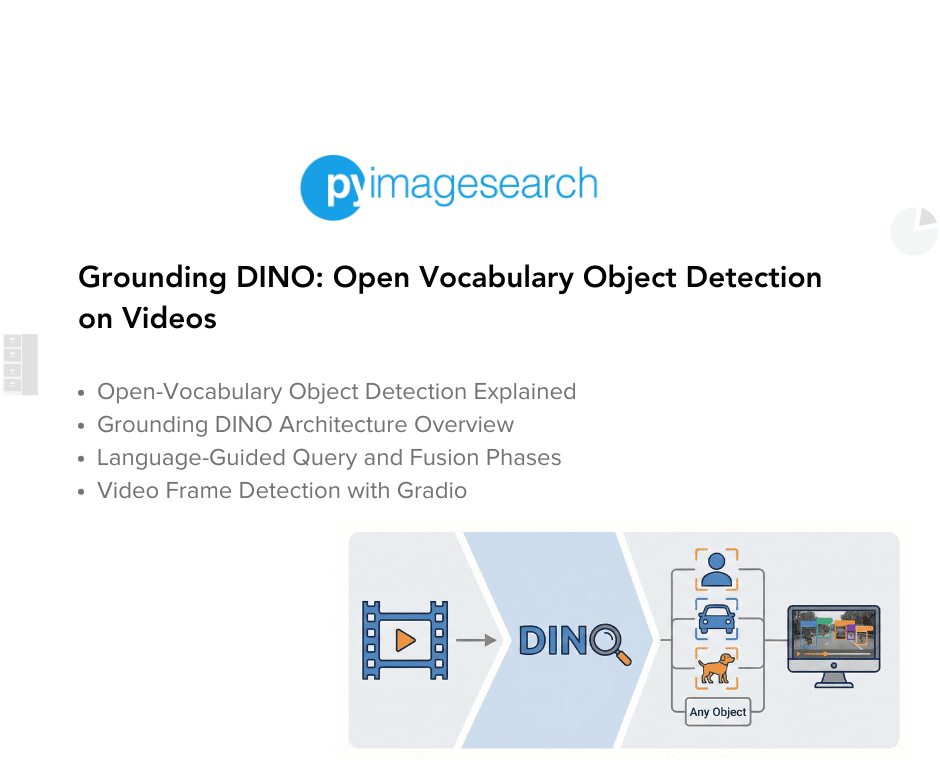

"Grounding DINO is a model that does exactly this: it "marries" a powerful vision detector (DINO) with language pre-training so it can understand text prompts. In practice, this means you can give Grounding DINO a sentence like "red bike under the tree" or a simple noun, and it will draw bounding boxes around those objects, even if they were not part of its original training labels."

"This makes the system highly flexible: it can be used in robotics ("pick up the blue mug"), search ("find all photos containing butterflies"), and image editing. For example, combining grounding with a diffusion model lets users say "replace the white cat with a tiger"; the system first detects the cat from the language prompt and then inpaints a tiger in its place. Crucially, the model generalizes to novel categories through language-based grounding rather than requiring labeled training images for every class."

Open-set object detection enables systems to find and localize arbitrary objects by name or description, including categories unseen during training. Grounding DINO combines a DINO vision detector with language pretraining so the model understands text prompts and links them to image regions. The model scores region proposals against text embeddings so novel concepts can be detected without explicit class labels. Language-guided detection supports tasks such as robotic manipulation, image search, and editing workflows that first locate targets and then perform operations like inpainting. Combining grounding with diffusion models enables targeted replacements by detecting objects via language and then synthesizing edits. Language-based grounding reduces dependence on labeled training images for every class.
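
To make the idea of scoring regions against text concrete, here is a minimal sketch in plain Python. It is not Grounding DINO's actual implementation: the function names (`cosine_similarity`, `match_regions_to_phrases`), the three-dimensional toy embeddings, and the 0.5 threshold are all assumptions chosen for illustration. It only shows the general pattern of comparing a region feature with each phrase embedding and keeping the matches that score high enough.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    a_n, b_n = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a_n, b_n))


def match_regions_to_phrases(region_feats, phrase_embs, threshold=0.5):
    """Hypothetical helper: label each region with its best-scoring phrase.

    Returns (region_index, phrase, score) tuples for regions whose best
    score reaches the threshold; other regions are dropped as background.
    """
    matches = []
    for idx, feat in enumerate(region_feats):
        best_phrase, best_score = None, -1.0
        for phrase, emb in phrase_embs.items():
            score = cosine_similarity(feat, emb)
            if score > best_score:
                best_phrase, best_score = phrase, score
        if best_score >= threshold:
            matches.append((idx, best_phrase, best_score))
    return matches


# Made-up embeddings: a real model would produce these from its text
# and image encoders, in a much higher-dimensional shared space.
phrase_embs = {
    "white cat": [1.0, 0.0, 0.0],
    "red bike": [0.0, 1.0, 0.0],
}
region_feats = [
    [0.9, 0.1, 0.0],  # region that resembles "white cat"
    [0.1, 0.8, 0.1],  # region that resembles "red bike"
    [0.0, 0.0, 1.0],  # region unrelated to either phrase
]

matches = match_regions_to_phrases(region_feats, phrase_embs)
for idx, phrase, score in matches:
    print(f"region {idx}: {phrase} (score={score:.2f})")
```

Because the phrases are ordinary dictionary keys rather than a fixed class list, adding a new category in this sketch only means adding a new phrase embedding, which mirrors how language-based grounding avoids per-class labeled training data.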

Read at PyImageSearch
