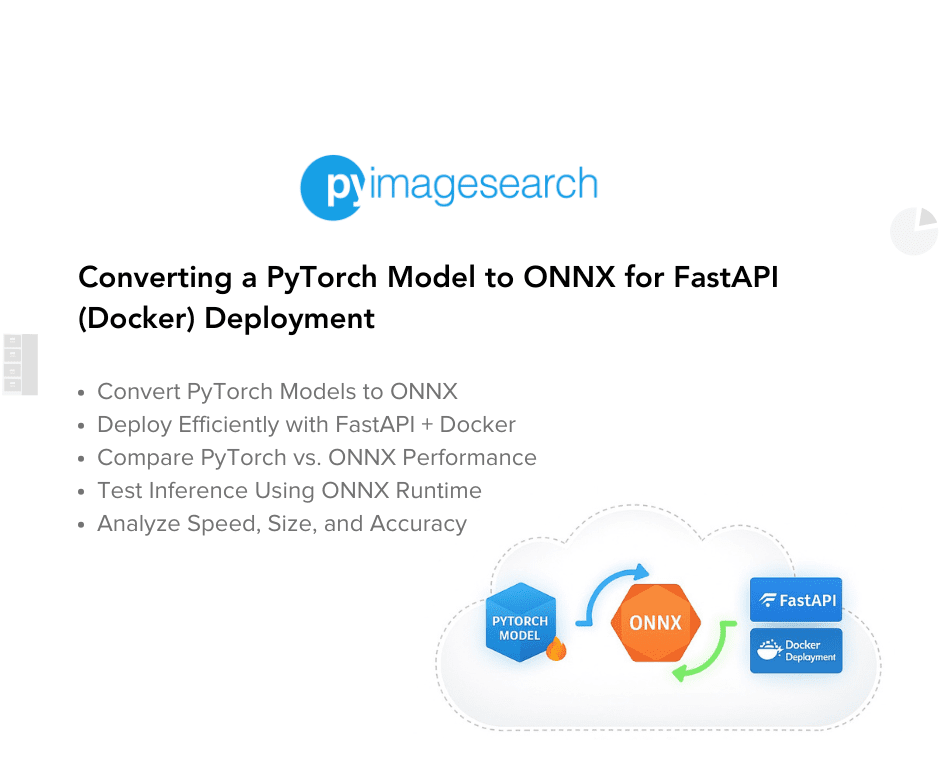

"In this lesson, you will learn how to convert a pre-trained ResNetV2-50 model from PyTorch Image Models (TIMM) to ONNX, analyze its structure, and test inference using ONNX Runtime. We'll also compare inference speed and model size against standard PyTorch execution to show why ONNX is better suited to lightweight AI inference. This prepares the model for integration with FastAPI and Docker, ensuring environment consistency before deploying to AWS Lambda."

AWS Lambda imposes several constraints that affect how a model must be packaged:

- Limited memory: AWS Lambda supports up to 10 GB of memory, which may not be enough for large models.
- Execution time limits: A single Lambda invocation cannot run longer than 15 minutes.
- Cold starts: If a Lambda function has been idle for a while, the next request must wait for a new execution environment to initialize, which adds latency to that inference.
- Storage restrictions: Zip-based Lambda deployment packages are limited to 250 MB unzipped (including dependencies and layers), so minimizing model and dependency size is crucial.
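The 250 MB package limit can be checked locally before uploading. The following is a minimal sketch. It assumes the handler code, the `.onnx` file, and all dependencies are staged in one local directory that would be zipped as-is.

The helpers `tree_size_bytes` and `fits_lambda_zip_limit` are hypothetical and are not part of any AWS tooling. Reading "250 MB" as 250 MiB is also an assumption, so leave some headroom in practice.

```python
from pathlib import Path

# Assumption: AWS's "250 MB unzipped" limit (function code plus layers) is read as MiB.
LAMBDA_UNZIPPED_LIMIT_BYTES = 250 * 1024 * 1024


def tree_size_bytes(root: str) -> int:
    """Total size in bytes of all regular files under root, recursively."""
    return sum(p.stat().st_size for p in Path(root).rglob("*") if p.is_file())


def fits_lambda_zip_limit(root: str, limit_bytes: int = LAMBDA_UNZIPPED_LIMIT_BYTES):
    """Return (size_in_bytes, fits) for a staged deployment directory."""
    size = tree_size_bytes(root)
    return size, size <= limit_bytes
```

Running `fits_lambda_zip_limit` on the staging directory after each optimization step shows how much room remains. It also shows which changes, such as dropping the PyTorch runtime in favor of ONNX Runtime, make the package fit.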

Convert a pre-trained ResNetV2-50 model from PyTorch Image Models (TIMM) to ONNX format, inspect the exported graph, and validate inference with ONNX Runtime. Benchmark inference latency and model file size against native PyTorch to evaluate suitability for lightweight inference. Address AWS Lambda constraints, including the 10 GB memory limit, the 15-minute execution limit, cold-start latency, and the 250 MB package size cap. To do so, optimize the model and its dependencies to reduce their footprint. Serve the optimized model through a FastAPI endpoint and containerize it with Docker to ensure a consistent environment before Lambda deployment.
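The latency comparison can use a small, framework-agnostic timing harness. The following is a minimal sketch. It assumes each backend is wrapped in a zero-argument callable, and the name `benchmark` and its statistics are illustrative choices rather than the lesson's method.

```python
import math
import statistics
import time
from typing import Callable, Dict


def benchmark(fn: Callable[[], object], warmup: int = 5, runs: int = 50) -> Dict[str, float]:
    """Time a zero-argument callable and return latency statistics in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be >= 1")
    for _ in range(warmup):
        fn()  # warm-up calls absorb one-time costs such as lazy initialization
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95_index = math.ceil(0.95 * len(samples)) - 1  # nearest-rank 95th percentile
    return {
        "mean_ms": statistics.fmean(samples),
        "median_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
    }
```

For ONNX Runtime, pass `lambda: session.run(None, {input_name: x_np})`. For PyTorch, pass a small function that calls the model inside `torch.no_grad()`, and use the same input for both. The median is usually a steadier comparison than the mean, because occasional slow runs can skew the mean.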

Read at PyImageSearch
