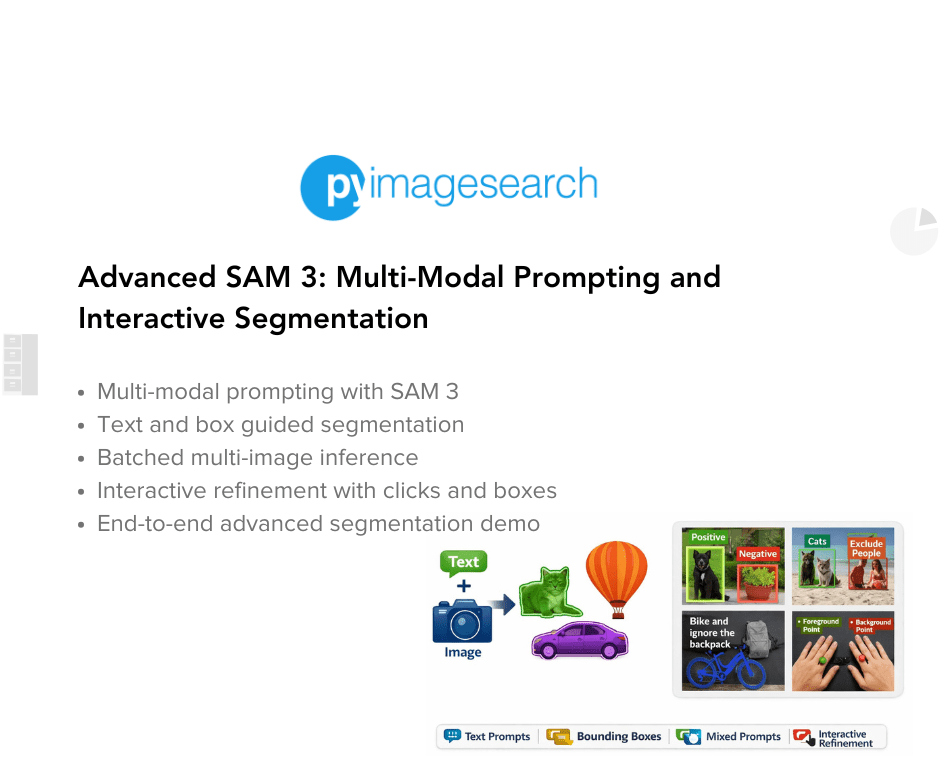

"SAM 3's true power lies in its flexibility; it does not just accept text prompts. It can process multiple text queries simultaneously, interpret bounding box coordinates, combine text with visual cues, and respond to interactive point-based guidance. This multi-modal approach enables sophisticated segmentation workflows that were previously impractical with traditional models. In Part 2, we will cover: Multi-prompt Segmentation: Query multiple concepts in a single image Batched Inference: Process multiple images with different prompts efficiently"

"Bounding Box Guidance: Use spatial hints for precise localization Positive and Negative Prompts: Include desired regions while excluding unwanted areas Hybrid Prompting: Combine text and visual cues for selective segmentation Interactive Refinement: Draw bounding boxes and click points for real-time segmentation control Each technique is demonstrated with complete code examples and visual outputs, providing production-ready workflows for data annotation, video editing, scientific research, and more."

"To learn how to perform advanced multi-modal prompting and interactive segmentation with SAM 3, just keep reading. Would you like immediate access to 3,457 images curated and labeled with hand gestures to train, explore, and experiment with ... for free? Head over to Roboflow and get a free account to grab these hand gesture images. To follow this guide, you need to have the following libraries installed on your system. !pip install --q git+https://github.com/huggingface/transformers supervision jupyter_bbox_widget"

SAM 3 enables advanced multi-modal segmentation by accepting simultaneous text queries, bounding box coordinates, visual cues, and interactive point guidance. Multi-prompt segmentation supports querying multiple concepts in a single image while batched inference processes many images with different prompts efficiently. Bounding box guidance and positive/negative prompts enable precise inclusion or exclusion of regions. Hybrid prompting combines text and visual inputs for selective segmentation. Interactive refinement allows real-time control via bounding boxes and click points. Complete code examples and visual outputs support production-ready workflows for data annotation, video editing, and scientific research. Required libraries include transformers, supervision, and jupyter_bbox_widget.

Read at PyImageSearch

Unable to calculate read time

Collection

[

|

...

]