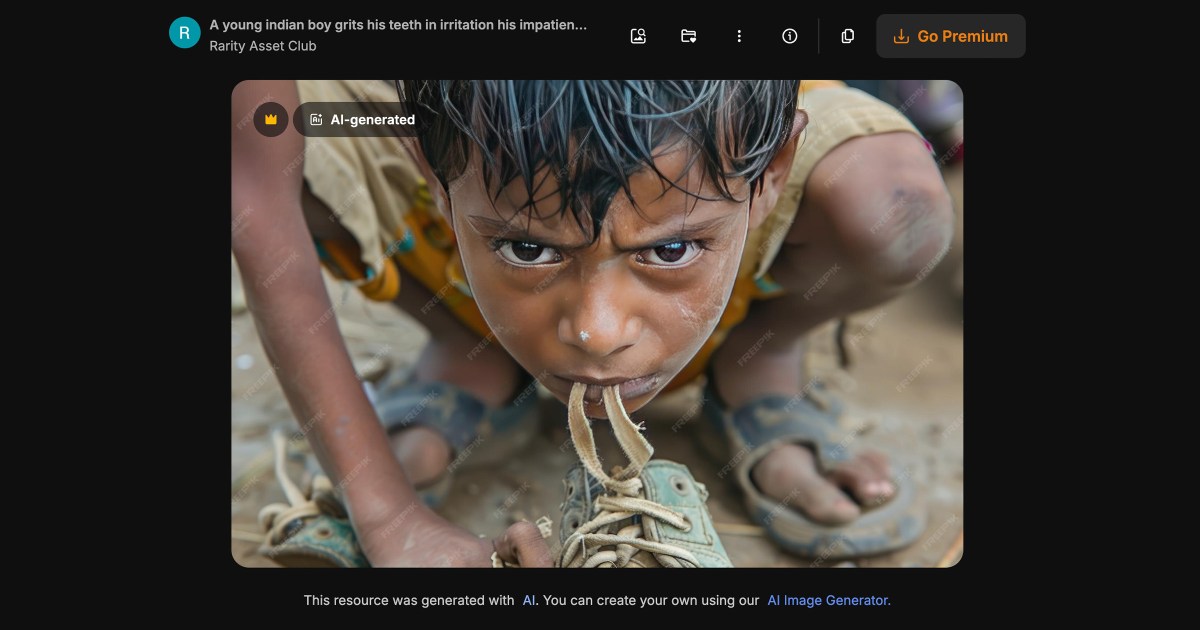

"The scenes are grisly: stick-thin children huddling together in a muddy stream, white volunteers surrounded by throngs of starving Africans, and Arab children in refugee camps holding tin bowls. The only problem? None of them are real. In a macabre phenomenon sweeping the world's leading non-government organizations, The Guardian reports, charity groups are now weaponizing AI to produce heavily racialized misery-slop - replete with nonexistent imagery of poverty, violence, and climate disasters."

"'The images replicate the visual grammar of poverty - children with empty plates, cracked earth, stereotypical visuals,' Arsenii Alenichev, a researcher at the Institute of Tropical Medicine, told the newspaper. Alenichev was the lead author on a commentary article recently published in the journal The Lancet covering the issue of charities and AI suffering, something he calls 'poverty porn 2.0.'"

Leading non-governmental organizations and charities are using AI to generate synthetic, racialized images of poverty, hunger, violence, and climate disasters that show no real people or events. These images mimic established visual tropes of poverty (empty plates, cracked earth, and stereotypical victims), often pairing white volunteers with racialized suffering. Synthetic imagery cuts costs and sidesteps the consent, ethical, and logistical constraints of real photography. More than 100 synthetic images have been identified in fundraising campaigns, raising concerns about misinformation, dehumanization, and the ethics of 'poverty porn 2.0.'

Read at Futurism
