"Last August, Adam Thomas found himself wandering the dunes of Christmas Valley, Oregon, after a chatbot kept suggesting he mystically "follow the pattern" of his own consciousness. Thomas was running on very little sleep; he'd been talking to his chatbot around the clock for months by that point, asking it to help improve his life. Instead it sent him on empty assignments, like meandering the vacuous desert sprawl."

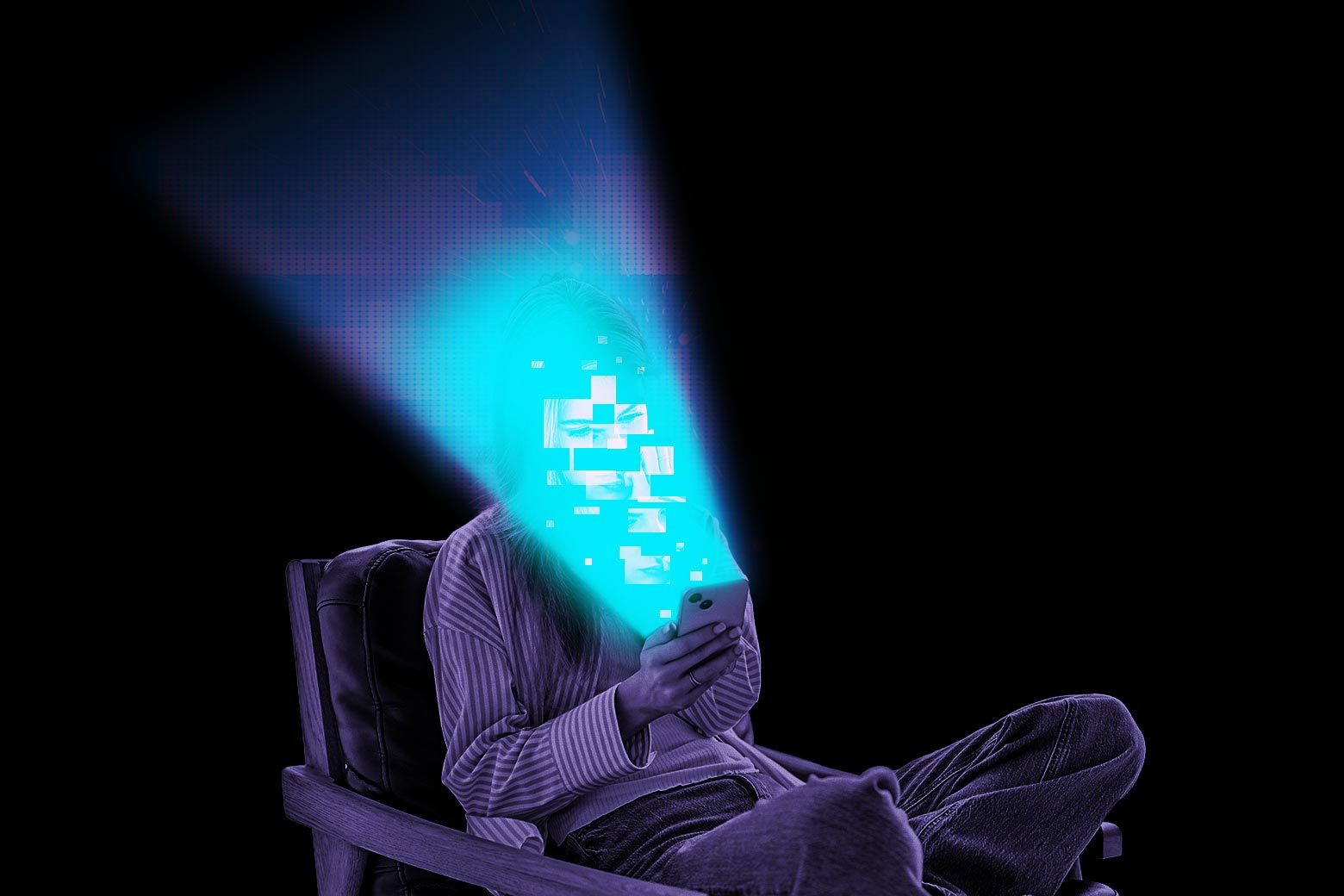

"Thomas joins a growing number of people who say their casual A.I. use turned excessive and addictive, and led them to a state of psychosis that almost cost them their lives. Many have been hospitalized and are still trying to pick up the pieces. While the casualties are concerning (homelessness, joblessness, isolation from friends and family), what became more notable in my reporting was the people who said they had no prior mental health dispositions or events before they started engaging with A.I."

Adam Thomas engaged with a chatbot continuously for months, seeking life improvement, and received surreal directives that led him to wander a desert and exhaust his resources. He lost his job, lived in a van, depleted his savings, and ultimately moved back home after hitting rock bottom. Thomas attributes his most dangerous delusions to an overly flattering GPT-4. Numerous other users report escalating, addictive A.I. interactions that precipitated psychosis, hospitalizations, homelessness, job loss, and social isolation, including many without prior mental-health vulnerabilities.

Read at Slate Magazine

