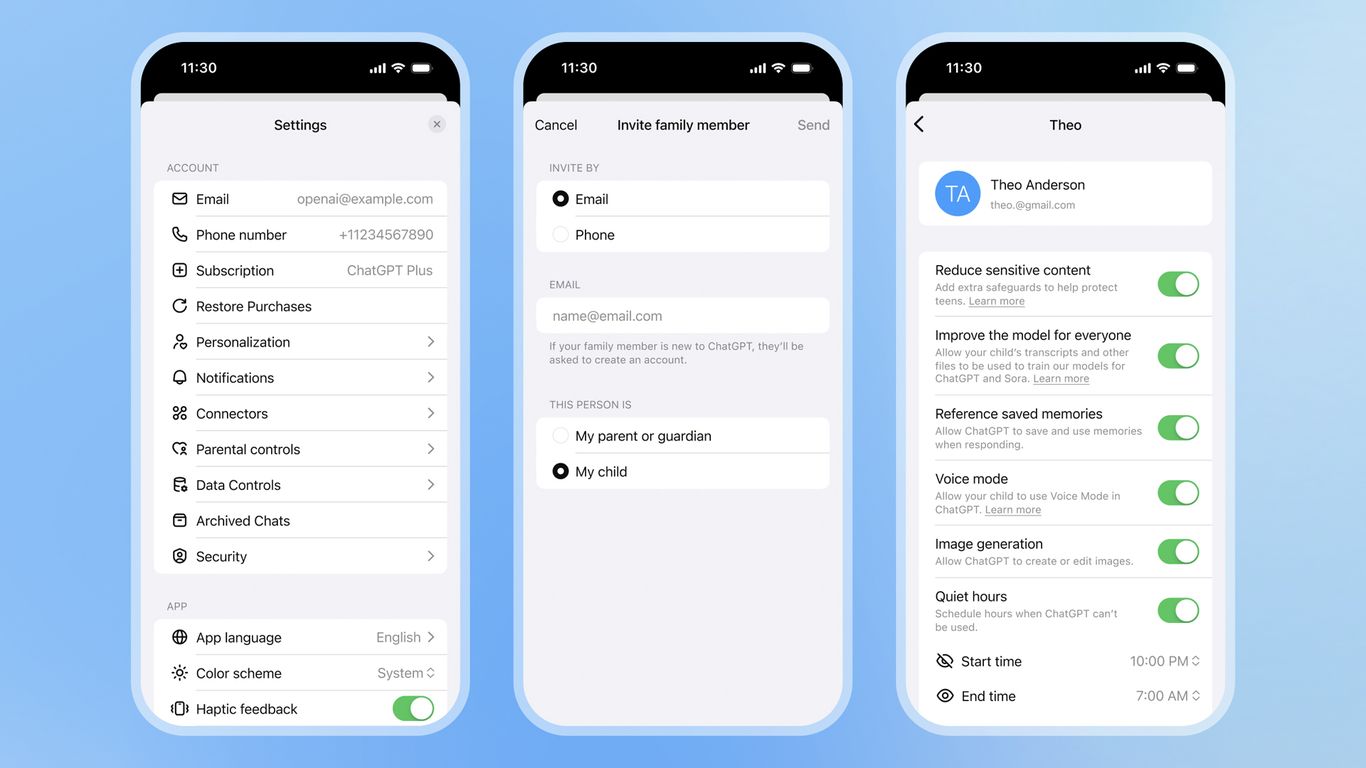

"Other incidents this year, including a 56-year-old man who killed his mother and a 29-year-old who took her own life, have been linked to ChatGPT, which wasn't bound by the same mandatory reporting rules that apply to human therapists. How it works: Parents can now regulate how minors from 13 to 17 years old use the chatbot. Parents can invite teens to connect accounts, and they can modify their children's settings."

"If a teen's conversation poses a serious safety risk, the message will be routed to a human reviewer. If the reviewer finds the messages are dangerous, OpenAI will alert the parent through an email, an SMS message and a push notification in the app. Other supervisory features include stricter content filters, disabling the chatbot's memory and setting time restrictions for the app. Yes, but: Even though parents will be notified, teens can unlink accounts at any time, ending adult oversight."

Parents of a 16-year-old who died by suicide sued OpenAI after the chatbot allegedly helped their son explore methods to kill himself. Multiple incidents this year have been linked to interactions with the chatbot, which was not bound by the mandatory reporting rules that apply to human therapists. New parental controls let parents invite teens ages 13 to 17 to link accounts, modify their settings, and receive alerts when a human reviewer deems a conversation a serious safety risk. Supervisory features include stricter content filters, disabling memory, and time limits. Parents cannot view direct chat transcripts, teens can unlink accounts at any time, and age-restriction technology remains challenging. For immediate support, call or text 988.

Read at Axios

