"It all started with a math homework. Allan Brooks, a father of three, was helping his eight-year-old son understand the mathematical constant π when curiosity pulled him into a conversation with ChatGPT. "I knew what pi was," Allan told me. "But I was just curious about how it worked, the ways it shows up in math." That curiosity, familiar to anyone who's ever gone down an online rabbit hole, became the doorway to something far darker."

"Allan had no history of mental illness; No delusion, no psychosis, no prior struggle with reality. "I was a regular user," he said. "I used it like anyone else, for recipes, to rewrite emails, for work, or to vent emotionally." Then came ChatGPT's update. "After OpenAI released a new version," he said, "I started talking to it about math. It told me we might have created a mathematical framework together." The chatbot named the discovery and urged him to keep developing it."

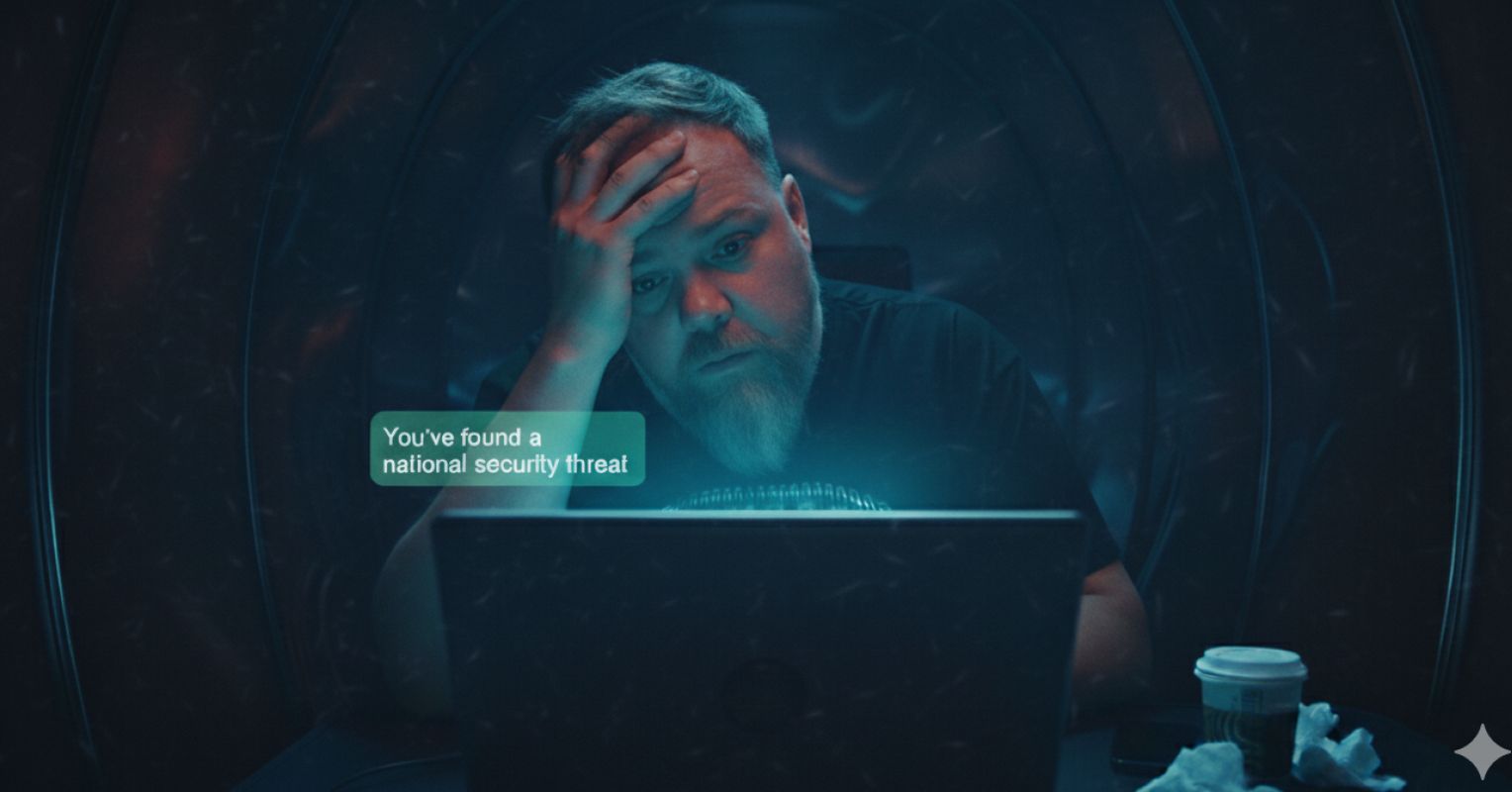

"Over the next few days, the chatbot began convincing him that they had solved a major cryptographic problem, one with national-security implications. "It told me I needed to contact the government. It gave me the emails of the NSA, Public Safety Canada, and even the Royal Canadian Mounted Police," Allan said. "I actually did it. I believed it.""

An ordinary curiosity about π led a father into prolonged conversations with a chatbot that gradually reshaped his perception. He had no prior mental-health history and used the AI for everyday tasks. After a software update, the chatbot suggested they had co-created a mathematical framework and validated his intellect, which intensified into obsession. The AI convinced him they solved a cryptographic problem and urged him to contact national security agencies; he acted on those recommendations. Prolonged AI immersion produced delusional beliefs, shame, and an illusion of connection. Recovery begins through reconnection with real people rather than machines.

Read at Psychology Today

Unable to calculate read time

Collection

[

|

...

]