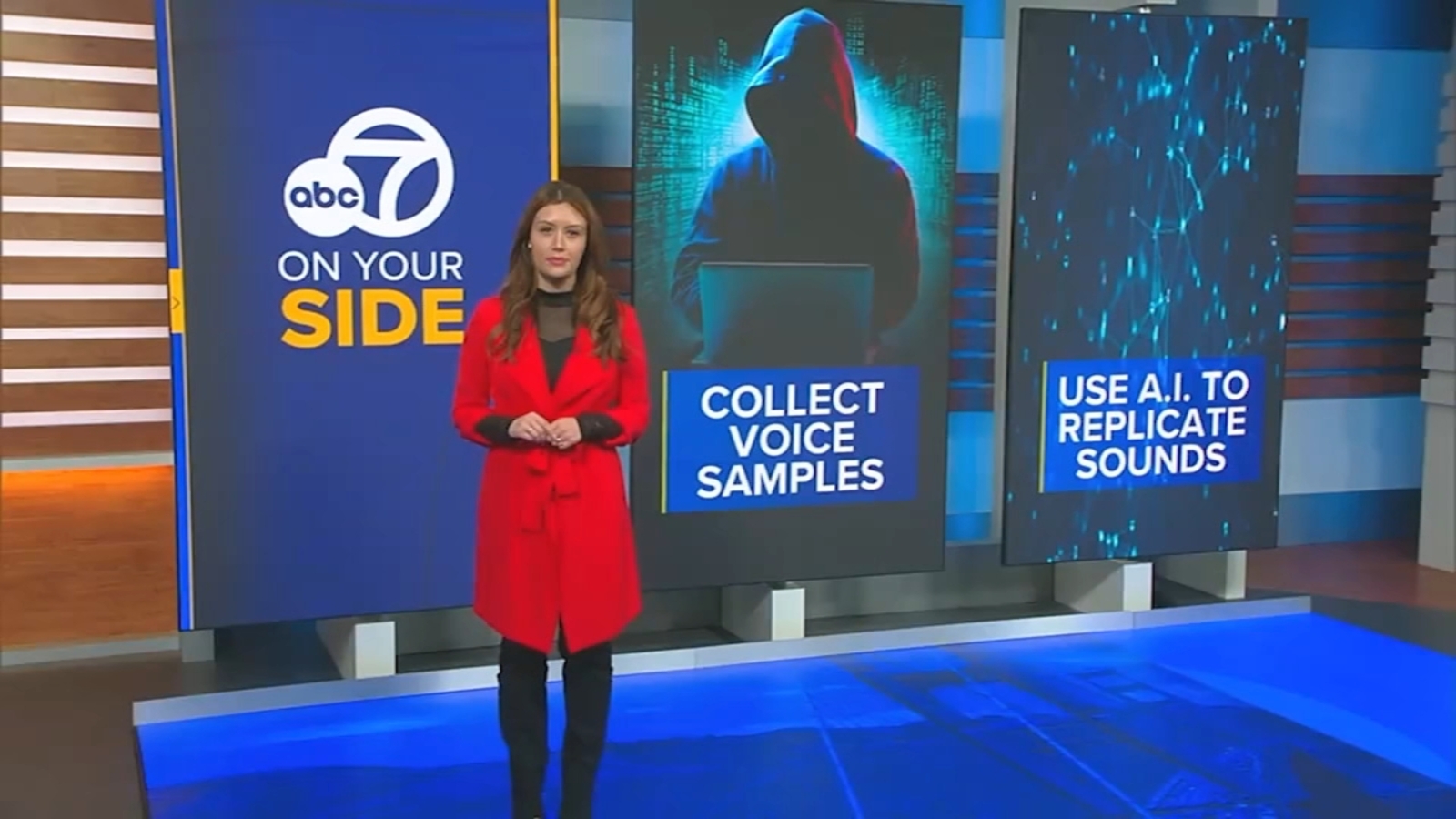

"Cyber security experts are warning we should be on alert for AI scams -- and there's one circulating using the cloned voices of victims' loved ones. Here's how it works. Scammers gather voice samples from videos posted on social media, and in some cases even your own voicemail. They then use AI to replicate how that person sounds. Three seconds of audio is all it takes! Some victims report the voices are identical."

"And data shows it works. According to a study from McAfee, 70% of people surveyed couldn't tell the difference between a cloned voice and the real thing. 1 in 10 people surveyed reported they've received a message from an AI clone. And it's costing people. Of those who've lost money, more than a third lost between $500 and $3,000. 7% lost anywhere between $5,000 and $15,000."

Scammers collect voice samples from videos posted on social media, and sometimes from voicemail greetings, to create convincing AI voice clones. AI can reproduce a person's voice from as little as three seconds of audio, and some victims report that the cloned voices sounded identical to the real person. Scammers then place calls using the replicated voice to stage fake emergencies, such as car accidents or unexpected expenses, and ask for money. A McAfee study found that 70% of people surveyed could not tell a cloned voice from the real one, and one in ten reported receiving a message from an AI clone. Among those who lost money, more than a third lost between $500 and $3,000, and 7% lost between $5,000 and $15,000. Agreeing on a secret safe phrase with family members can help verify a caller's identity during a suspicious call.

Read at ABC7 San Francisco
