"On paper, the specifications look great. The AI HAT+ 2 delivers 40 TOPS (INT4) of inference performance. The Hailo-10H silicon is designed to accelerate large language models (LLMs), vision language models (VLMs), and "other generative AI applications." Computer vision performance is roughly on a par with the 26 TOPS (INT4) of the previous AI HAT+ model."

"Running it is a simple case of grabbing a fresh copy of the Raspberry Pi OS and installing the necessary software components. The AI hardware is natively supported by rpicam-apps applications. In use, it worked well. We used a combination of Docker and the hailo-ollama server, running the Qwen2 model, and encountered no issues running locally on the Pi. However, while 8 GB of onboard RAM makes for a nice headline feature, it seems a little weedy considering the voracious appetite AI applications have for memory."

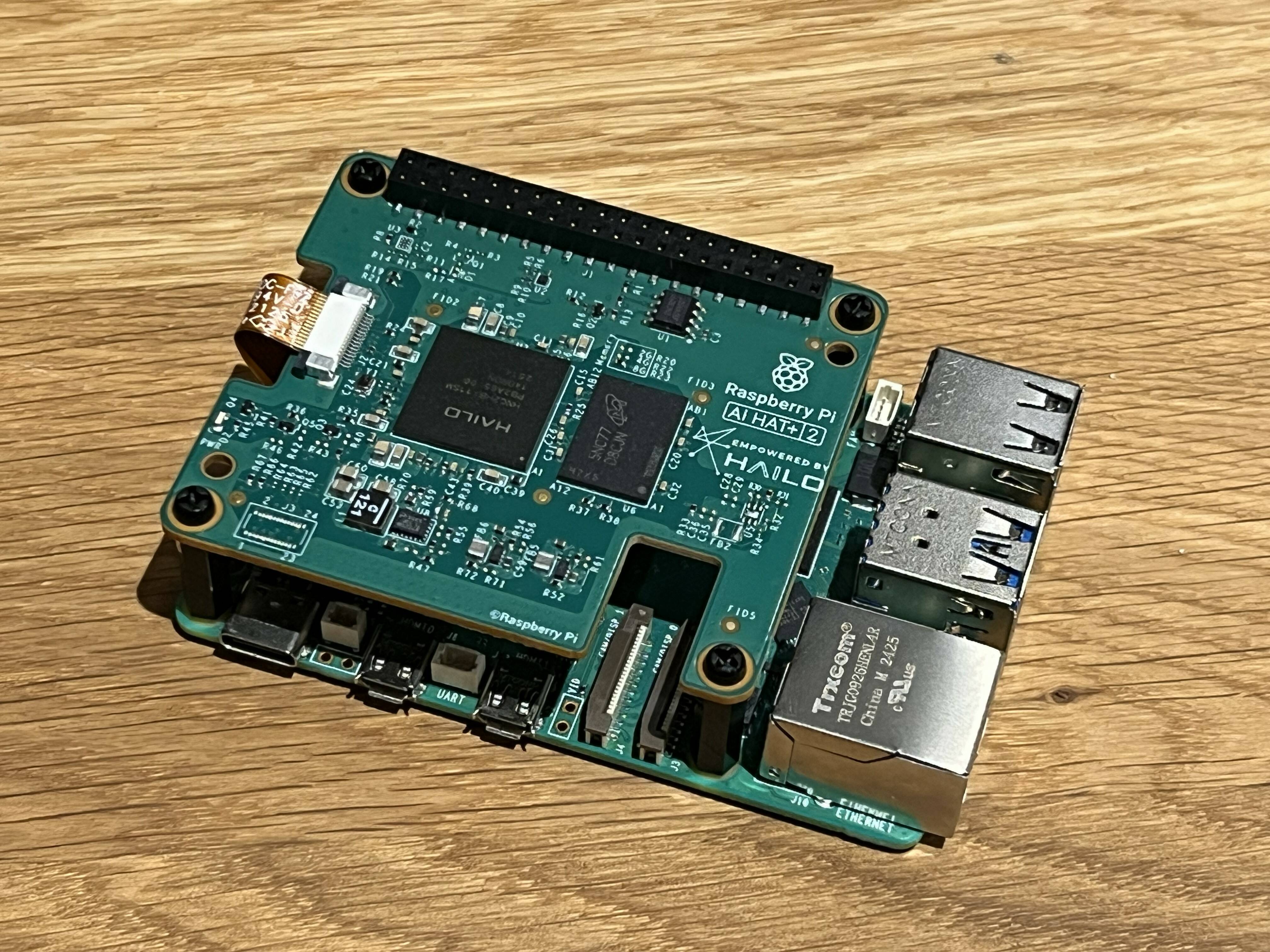

The AI HAT+ 2 integrates a Hailo-10H neural accelerator and 8 GB of onboard RAM, delivering 40 TOPS (INT4) aimed at LLMs, VLMs, and other generative AI tasks. Computer vision throughput remains roughly 26 TOPS (INT4), similar to the previous AI HAT+. The board connects via the Raspberry Pi GPIO and communicates over the Pi's PCIe interface. An optional passive heatsink is supplied but active cooling is recommended because the chips run hot. Native support exists in rpicam-apps, and Docker-based stacks such as hailo-ollama can run models like Qwen2 locally. The onboard RAM reduces host memory usage but may be insufficient for larger AI workloads, and pricing places the AI HAT+ 2 around $130 versus existing vision-focused options.

Read at Theregister

Unable to calculate read time

Collection

[

|

...

]