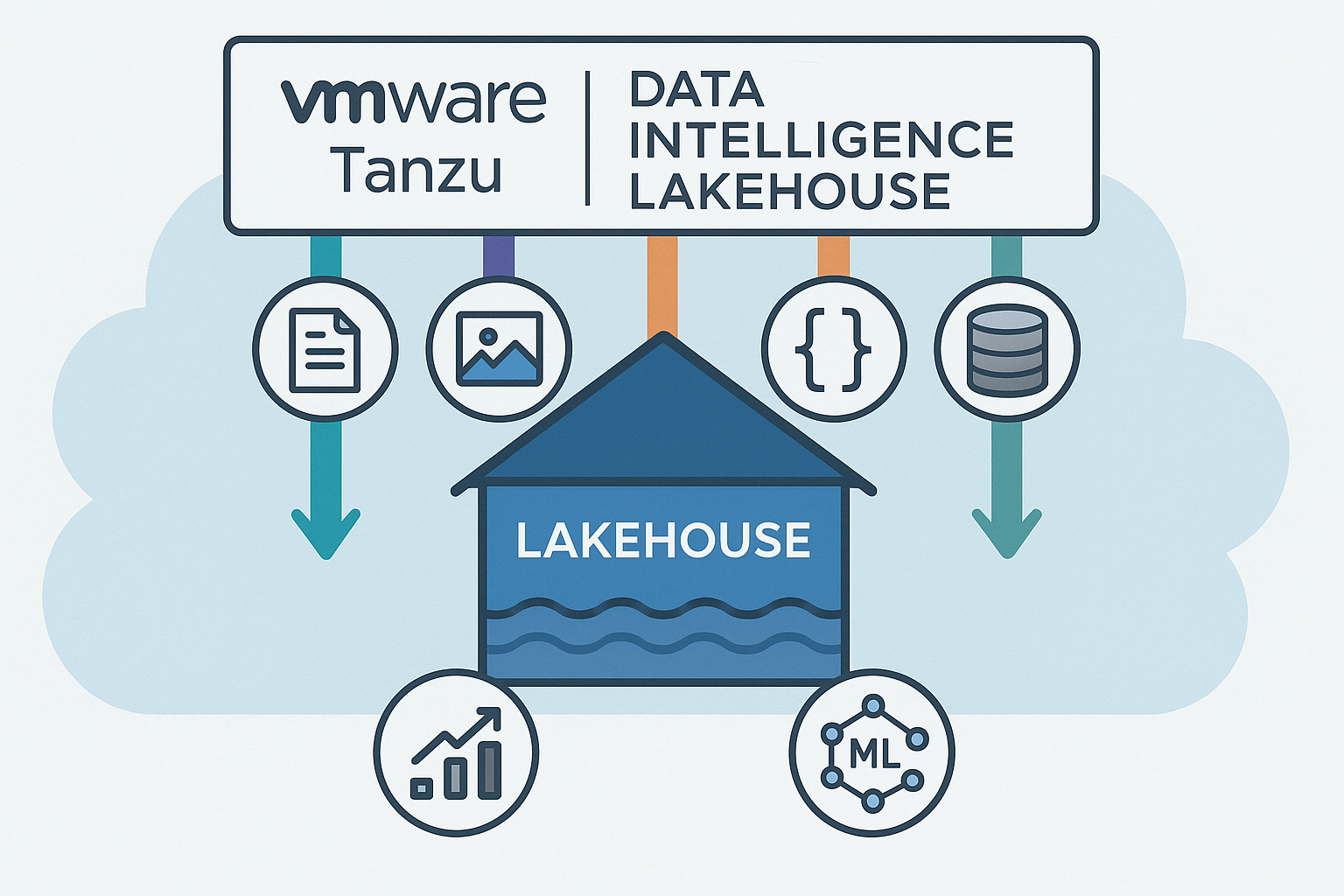

"Broadcom has launched Tanzu Data Intelligence, a new lakehouse platform that makes multimodal data accessible for AI applications. Together with Tanzu Platform 10.3, VMware aims to accelerate the development of AI agents on private clouds. According to Gartner, 30% of generative AI projects will be discontinued before 2026 due to poor data quality, insufficient risk coverage, or unclear business value. VMware aims to address this issue with Tanzu Data Intelligence, a platform that brings structured and unstructured data together in a single lakehouse."

"... said Purnima Padmanabhan, vice president and general manager of the Tanzu division at Broadcom. Many organizations have already invested in application platforms and SaaS tooling to modernize their data strategy. This has not always had the desired result, due to new data silos and additional high costs to transfer data between different environments. With Tanzu Data Intelligence, all that data should be managed in-house and on-premises."

"The core of Tanzu Data Intelligence is built on an enterprise-grade lakehouse architecture that is suitable for processing large-scale workloads with high performance, flexibility, and governance. The platform scales from terabytes to petabytes, with millisecond latency and support for large numbers of users and APIs simultaneously. In addition, the lakehouse has five specialized components: Ingestion and Workflow Orchestration: Stream and batch pipelines enable seamless movement and transformation of data from multiple sources, supporting real-time dataflows and event-driven architectures."

Tanzu Data Intelligence is an enterprise lakehouse platform that unifies structured and unstructured multimodal data for AI workloads on private clouds. The platform scales from terabytes to petabytes, delivers millisecond latency, supports large numbers of concurrent users and APIs, and emphasizes governance. It includes ingestion and workflow orchestration for stream and batch pipelines, federated query services for unified access, and additional specialized components for AI readiness and workload optimization. The offering aims to reduce data silos and data-transfer costs by keeping data managed in-house and on-premises, with the goal of improving data quality, risk coverage, and business value for generative AI projects.

Read at Techzine Global
