"Ollama simplifies the process of running LLM models locally, enabling users to extract structured data effortlessly, as demonstrated with Python documentation PDFs."

"To extract structured data from the Python Manuals, we need to define specific data classes that encapsulate the required information about modules, classes, and methods."

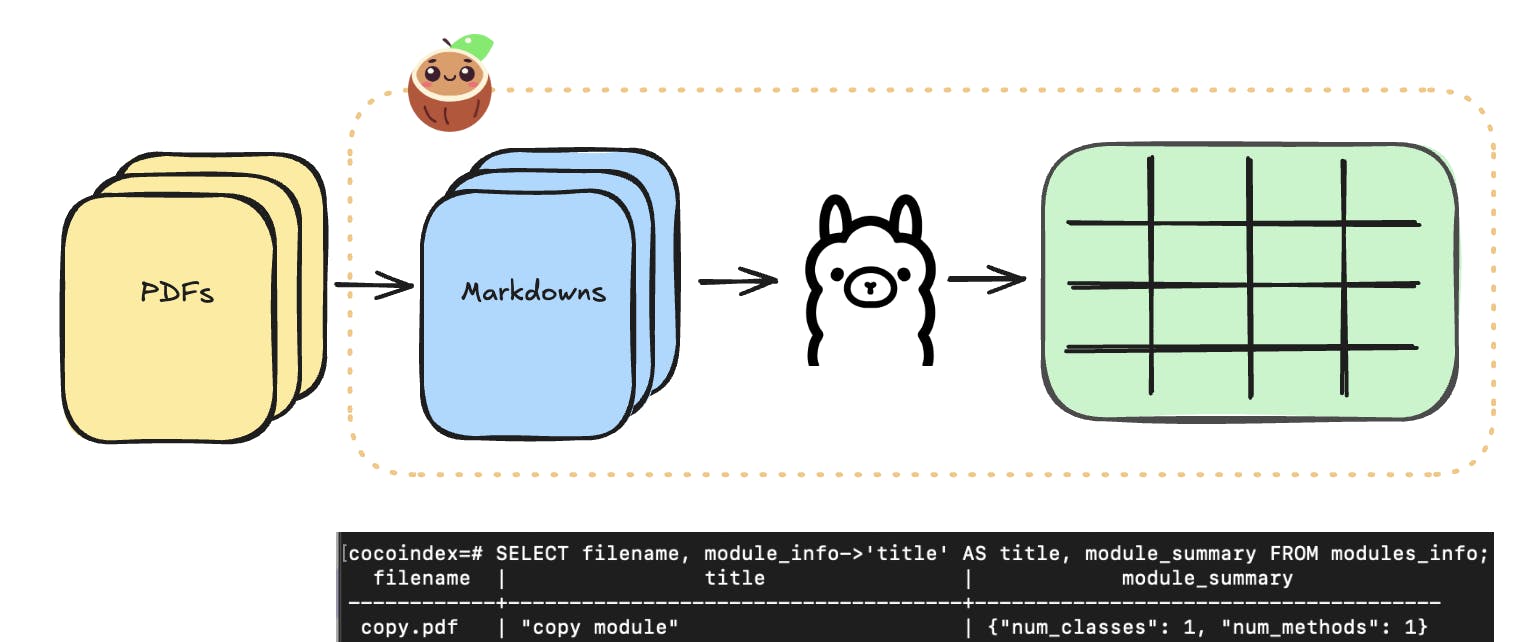

"The cocoIndex flow allows users to easily set up a structured extraction process from markdown files, focusing on the automation of data handling."

"With just about 100 lines of code, the capabilities of Ollama and cocoIndex showcase how accessible and effective local LLM model implementations can be."

This blog post introduces Ollama, a tool for running LLMs on local machines and deploying them in the cloud. The core example extracts structured data from Python documentation PDFs: it defines data classes that capture module, class, and method information, then uses a cocoIndex flow to automate extraction from markdown files. The whole process takes only about 100 lines of Python, which keeps it approachable for anyone who wants to try structured data extraction.
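To make the "data classes" step concrete, here is a minimal sketch of what such a schema could look like. The names `MethodInfo`, `ClassInfo`, `ModuleInfo`, and `module_from_json` are hypothetical and chosen for illustration; the original post's classes and fields may differ. The sketch also assumes the model returns JSON shaped like these classes, and it does not use the cocoIndex or Ollama APIs.

```python
import json
from dataclasses import dataclass, field


# Hypothetical schema: the field names are assumptions, not the post's own.
@dataclass
class MethodInfo:
    """A method or function described in the manual."""
    name: str
    description: str = ""


@dataclass
class ClassInfo:
    """A class and the methods documented for it."""
    name: str
    description: str = ""
    methods: list[MethodInfo] = field(default_factory=list)


@dataclass
class ModuleInfo:
    """A module with its classes and module-level functions."""
    title: str
    description: str = ""
    classes: list[ClassInfo] = field(default_factory=list)
    methods: list[MethodInfo] = field(default_factory=list)


def _to_method(raw: dict) -> MethodInfo:
    # Missing descriptions default to an empty string.
    return MethodInfo(name=raw["name"], description=raw.get("description", ""))


def module_from_json(text: str) -> ModuleInfo:
    """Build a ModuleInfo from a JSON string (assumed to be an LLM's output)."""
    data = json.loads(text)
    classes = [
        ClassInfo(
            name=c["name"],
            description=c.get("description", ""),
            methods=[_to_method(m) for m in c.get("methods", [])],
        )
        for c in data.get("classes", [])
    ]
    return ModuleInfo(
        title=data["title"],
        description=data.get("description", ""),
        classes=classes,
        methods=[_to_method(m) for m in data.get("methods", [])],
    )
```

Nesting the classes this way mirrors the module, class, method hierarchy of the documentation, so a single extracted object describes one manual page.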

Read at Hackernoon
