"Anthropic launched its newest model, Claude Opus 4.5, putting the company back atop the benchmark rankings for AI software coding. Opus 4.5 scores over 80% on the widely used SWE-bench, which tests models for software engineering skill. Google's impressive Gemini 3 Pro, launched last week, briefly held the top score with 76.2%. Anthropic's Claude product lead Scott White tells Fast Company that the model has also scored higher than any human on the engineering take-home assignment the company gives to engineering job candidates."

"Of course, Opus 4.5 does a lot more than coding. Anthropic says Opus 4.5 is also the 'best model in the world' for powering AI agents and for operating a computer, and that it's meaningfully better than other models at tasks like deep research and working with slides and spreadsheets. Opus 4.5 also notched state-of-the-art (best) scores in several other key benchmarks, including agentic coding (SWE-bench Verified), agentic tool use (τ2-bench), and novel problem solving (ARC-AGI-2)."

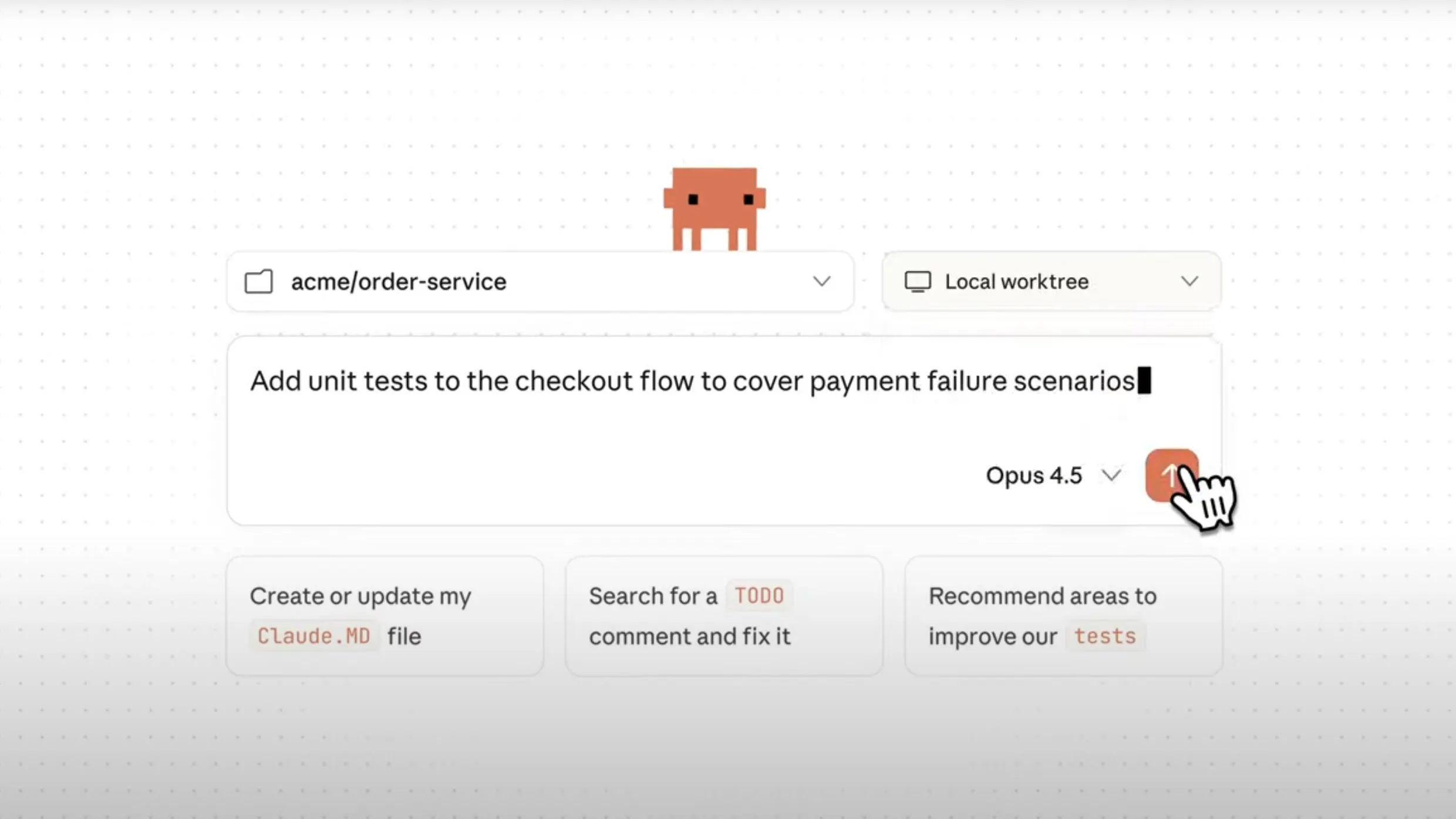

Anthropic launched Claude Opus 4.5, which scores over 80% on SWE-bench and puts the company back at the top of the coding benchmark rankings. The model reportedly scored higher than any human on the engineering take-home assignment Anthropic gives to job candidates. Anthropic positions Opus 4.5 as the best model for powering AI agents and operating a computer, and as stronger than rival models at deep research and at working with slides and spreadsheets. Opus 4.5 achieved state-of-the-art results on agentic coding (SWE-bench Verified), agentic tool use (τ2-bench), and novel problem solving (ARC-AGI-2). Customers report better handling of uncertainty, better trade-off decisions, and concrete gains in office automation, financial modeling, and Excel accuracy and efficiency. The model will roll out as a default option for higher-tier subscribers.

Read at Fast Company
