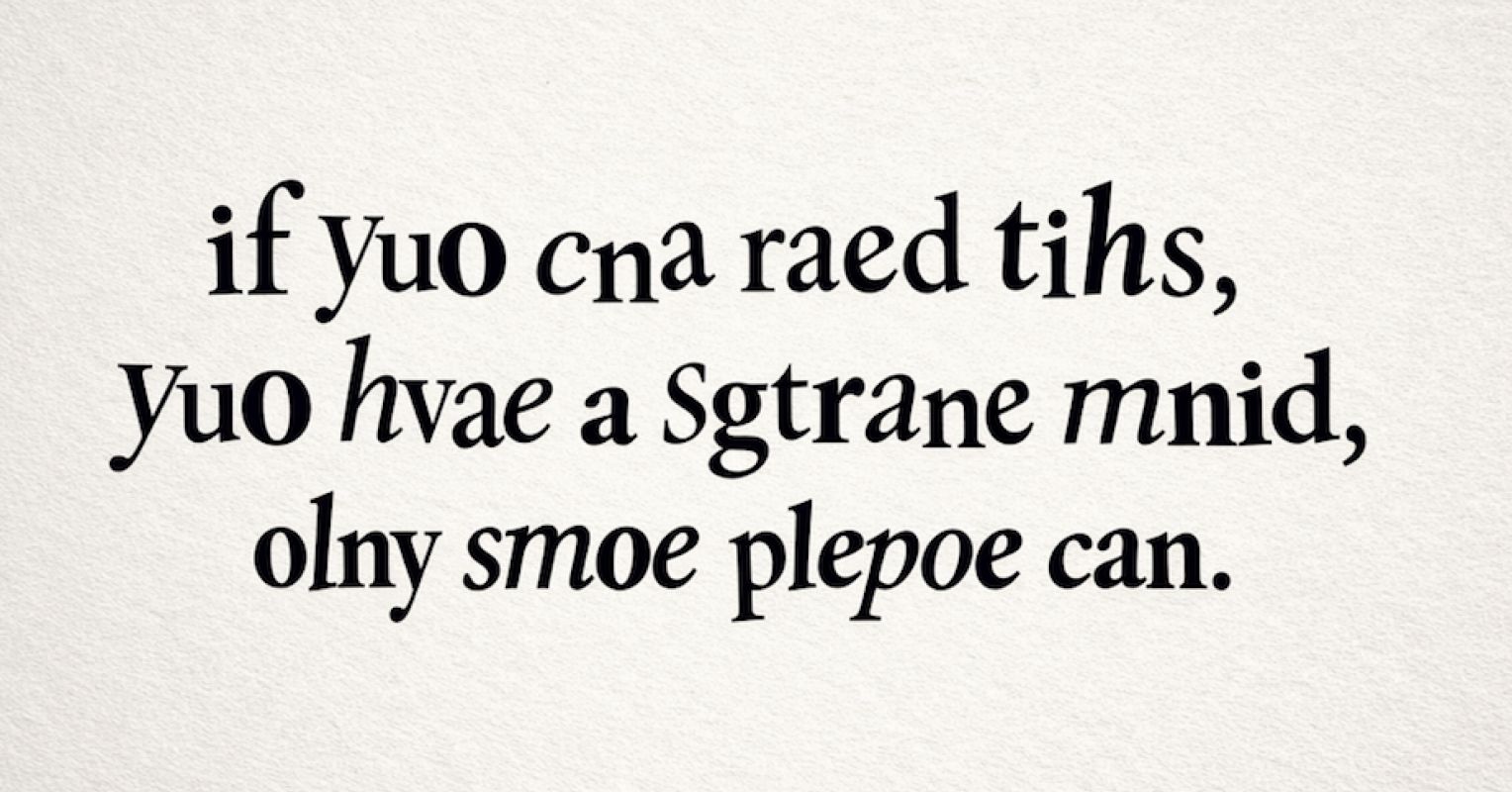

"A recent paper from the University of Wisconsin and the "Paradigms of Intelligence" Team at Google makes a fascinating claim. Large language models can extract and reconstruct meaning even when much of the semantic content of a sentence has been replaced with nonsense. Strip away the words, keep the structure, and the system still often knows what is being said. Freaky, right?"

"What's absent here is just as important as what remains. There is no world model or reference to lived experience that anchors this output. AI doesn't "know" what a dog, a promise, a danger, or a death actually is. Yet the output carries the same authority as genuine comprehension, at least on the surface. The key point here is that the system isn't reasoning toward meaning. It is navigating a hyperdimensional space of linguistic patterns and landing on the most probable completion."

Large language models can often recover the intended meaning of a sentence even when its content words are replaced by invented tokens, provided the grammatical structure is preserved. The models rely on syntax, word position, and learned statistical expectations to infer plausible completions, without access to a world model or lived experience. Their outputs can therefore appear fluent and authoritative while lacking semantic grounding in real objects and events. The systems operate by navigating a high-dimensional space of linguistic patterns and selecting the most probable continuation. Pattern and form supply the outward shape of intelligence while concealing the absence of genuine understanding.

Read at Psychology Today
