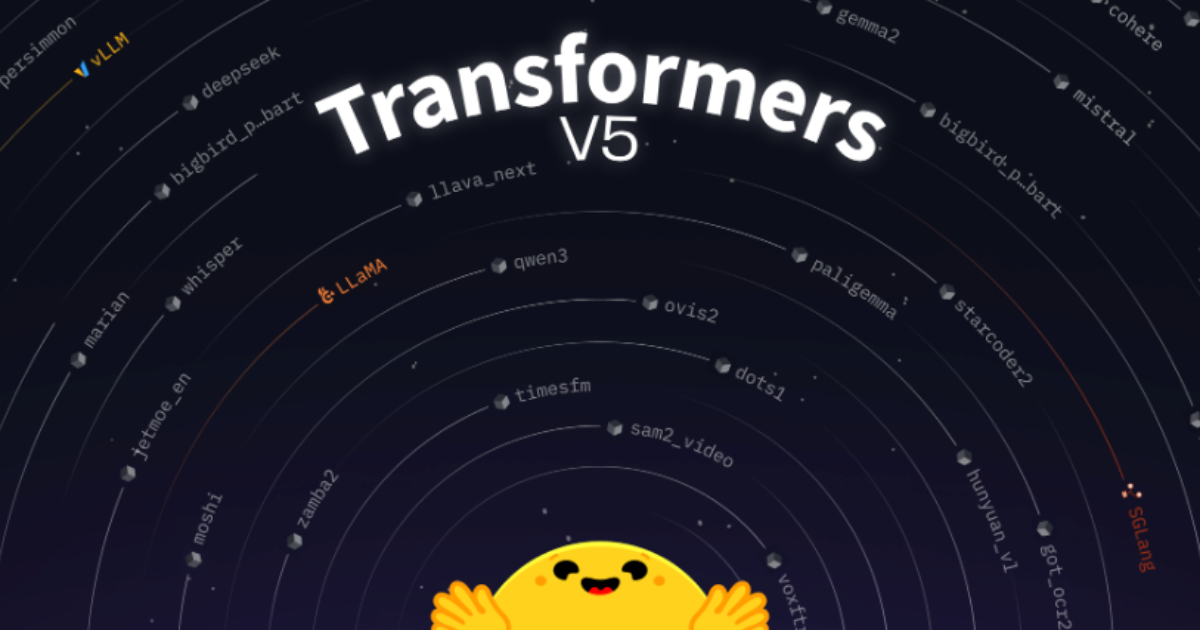

"Hugging Face has announced the first release candidate of Transformers v5. This marks an important step for the Transformers library, which has evolved significantly since the v4 release five years ago. It has transitioned from a specialized model toolkit to a key resource in AI development, currently recording over three million installations per day, with a total of more than 1.2 billion installs."

"A central theme of the release is simplification. Hugging Face has continued its move toward a modular architecture, reducing duplication across model implementations and standardizing common components such as attention mechanisms. The introduction of abstractions, such as a unified AttentionInterface, allows alternative implementations to coexist cleanly without bloating individual model files. This makes it easier to add new architectures and maintain existing ones."

"Transformers v5 also narrows its backend focus. PyTorch is now the primary framework, with TensorFlow and Flax support being sunset in favor of deeper optimization and clarity. At the same time, Hugging Face is working closely with the JAX ecosystem to ensure compatibility through partner libraries rather than duplicating effort inside Transformers itself. On the training side, the library has expanded support for large-scale pretraining. Model initialization and parallelism have been reworked to integrate more cleanly with tools like Megatron, Nanotron, and TorchTitan..."

Hugging Face has published the first release candidate of Transformers v5, the library's first major structural update in the five years since v4. The release targets long-term sustainability and interoperability, so that model definitions, training workflows, inference engines, and deployment targets work together with minimal friction. It emphasizes simplification and a modular architecture. This reduces duplication and standardizes shared components such as attention through abstractions like a unified AttentionInterface. PyTorch becomes the primary backend while TensorFlow and Flax support is sunset, and JAX compatibility is pursued through partner libraries. Training support has expanded to large-scale pretraining, with initialization and parallelism reworked to integrate with tools such as Megatron and TorchTitan.

Read at InfoQ
