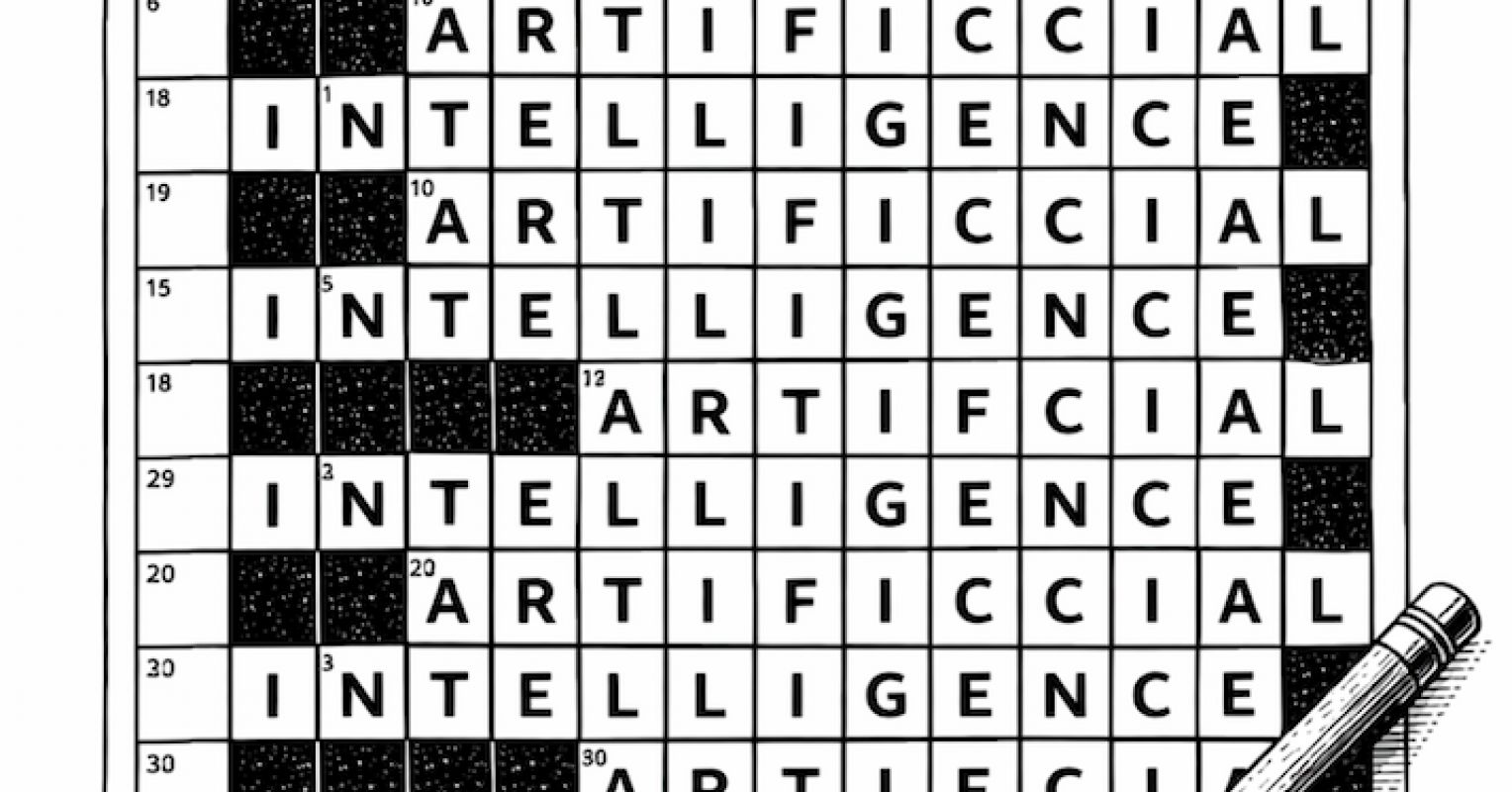

"One of the strangest things about large language models is not what they get wrong, but what they assume to be correct. LLMs behave as if every question already has an answer. It's as if reality itself is always a kind of crossword puzzle. The clues may be hard, the grid may be vast and complex, but the solution is presumed to exist. Somewhere, just waiting to be filled in."

"When an LLM gives a confident answer that turns out to be wrong, it isn't lying in the human sense. It is acting out its core assumption that an answer must exist, that every prompt points to a fillable blank, and that the shape of an answer implies the reality of one. The essential observation is that LLMs mistake the form of completion for the existence of truth."

Large language models treat prompts as incomplete patterns to be filled, presuming that answers already exist. They prioritize fluent completion over hesitation and produce confident outputs even when uncertain. Humans, by contrast, can inhabit not-knowing: they can feel the gaps and live within unresolved questions, a capacity that can be valuable rather than a flaw. Language models cannot authentically represent doubt. They interpolate toward completion and so risk mistaking the form of a complete answer for actual truth. Protecting the value of wonder requires embracing questions that may never resolve instead of forcing premature closure.

Read at Psychology Today
