> It is a seductive idea, especially in a world where software projects are notorious for moving slowly. But inside large companies, that vision is already starting to unravel. What looks impressive in a demo often falls apart in the real world. As soon as AI-generated code runs into actual enterprise data, the problems show up. Schemas clash, governance breaks down, and a supposed breakthrough can quickly turn into a liability.

> "Coding agents tend to break down when they're introduced to complex enterprise constraints like regulated data, fine-grained access controls, and audit requirements," Sridhar Ramaswamy, CEO of Snowflake, tells Fast Company. He says most coding agents are built for speed and independence in open environments, not for reliability inside tightly governed systems. As a result, they often assume they can access anything, break down when controls are strict, and cannot clearly explain why they ran a certain query or touched a specific dataset.

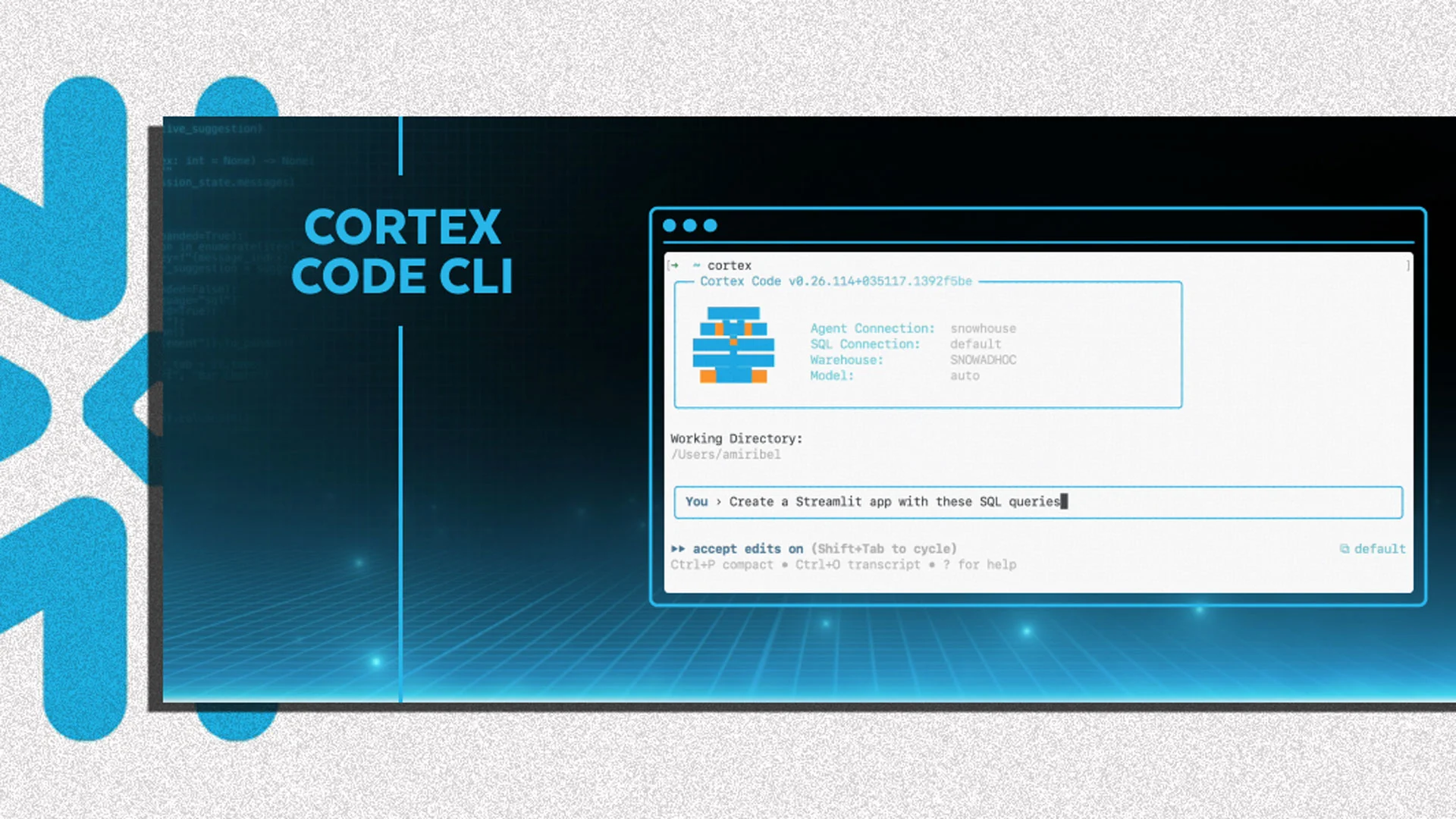

AI coding agents are receiving heavy attention across startups and big tech, promising to build software from simple descriptions. In enterprise settings, however, these agents often fail when confronted with real data: schemas clash, governance breaks down, and the agents become liabilities. Many assume unrestricted access, break under fine-grained access controls, and cannot explain why they ran a query or accessed a dataset. Gartner predicts 40% of agentic AI projects will be canceled by 2027 for lack of governance, and only 5% of custom enterprise AI tools will reach production. Companies must prioritize secure, transparent, and compliant code that ensures trust, accuracy, and accountability. Snowflake offers Cortex Code as a data-native coding agent built to operate within governed data environments.

Read at Fast Company
