"RSAC Corporate AI models are already skewed to serve their makers' interests, and unless governments and academia step up to build transparent alternatives, the tech risks becoming just another tool for commercial manipulation."

"It's integrity that we really need to think about, integrity as a security property and how it works with AI."

"Did your chatbot recommend a particular airline or hotel because it's the best deal for you, or because the AI company got a kickback from those companies?"

"The EU AI Act provides a mechanism to adapt the law as technology evolves, though he acknowledged there are teething problems."

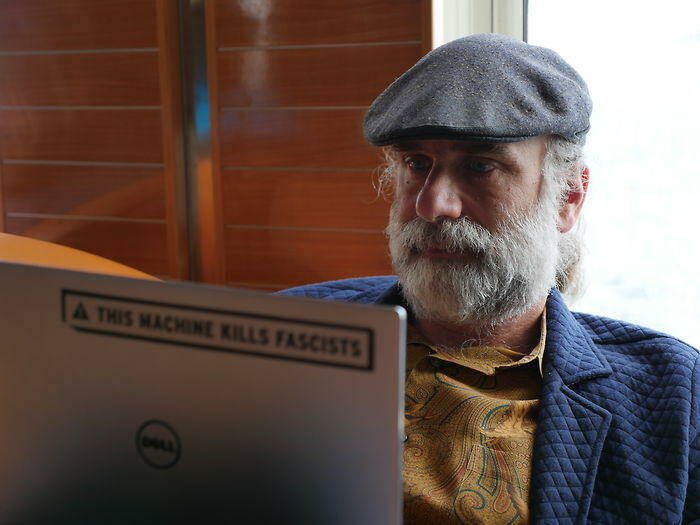

Bruce Schneier warns that corporate AI models are already skewed to benefit their makers, which risks commercial manipulation of the kind associated with search engines. He stresses the need for transparency in AI and backs regulatory frameworks such as the EU AI Act, which require companies to be open about how they handle data and reach decisions. Schneier acknowledges problems with the legislation but believes it can influence global standards. In the US, he sees meaningful regulation as unlikely under the current administration.

Read at The Register
