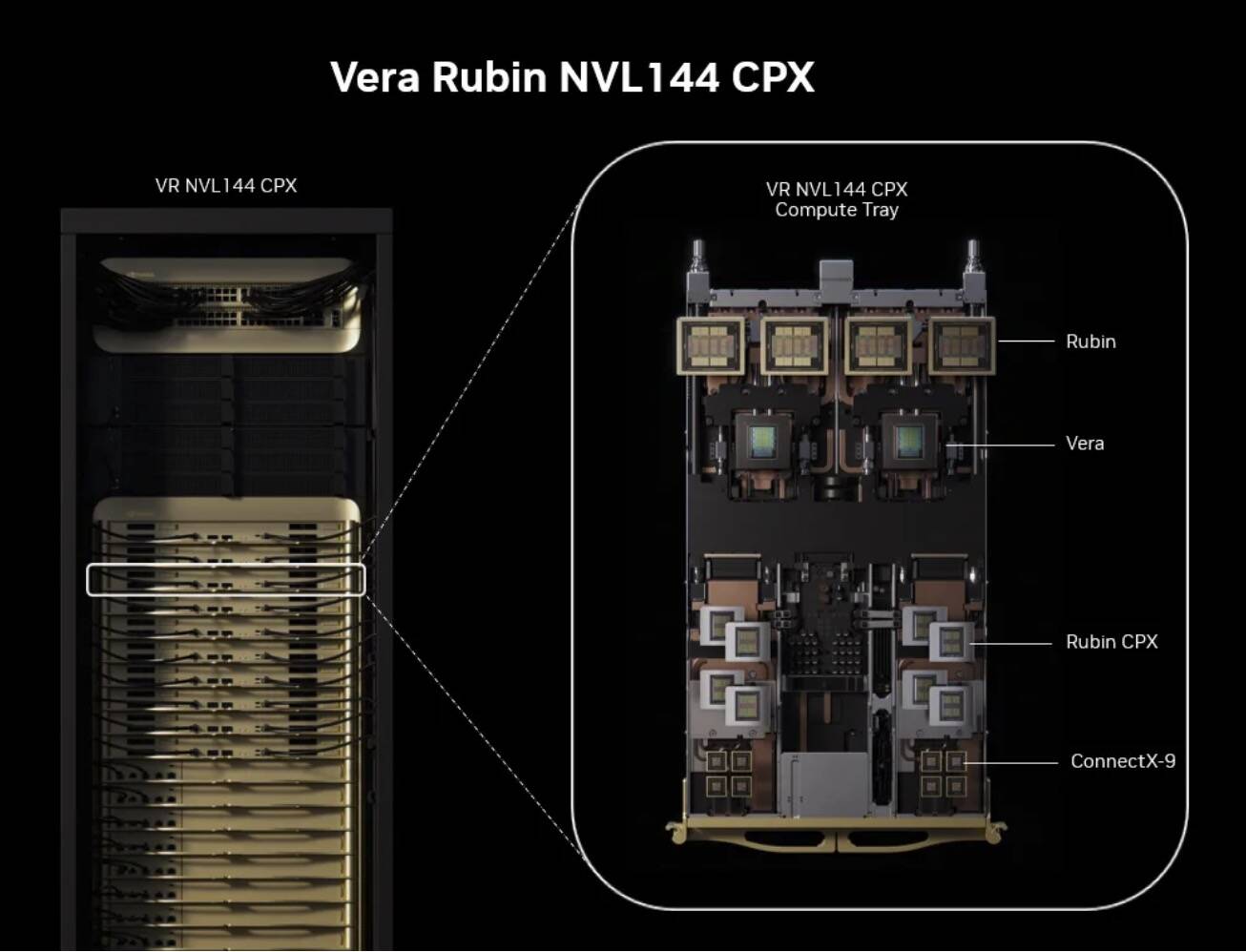

"Nvidia on Tuesday unveiled the Rubin CPX, a GPU designed specifically to accelerate extremely long-context AI workflows like those seen in code assistants such as Microsoft's GitHub Copilot, while simultaneously cutting back on pricey and power-hungry high-bandwidth memory (HBM). The first indication that Nvidia might be moving in this direction came when CEO Jensen Huang unveiled Dynamo during his GTC keynote in spring. The framework brought mainstream attention to the idea of disaggregated inference."

"As you may already be aware, inference on large language models (LLMs) can be broken into two phases: a computationally intensive prefill phase and a memory bandwidth-bound decode phase. Traditionally, both prefill and decode have taken place on the same GPU. Disaggregated serving allows different numbers of GPUs to be assigned to each phase of the pipeline, avoiding compute or bandwidth bottlenecks as context sizes grow - and they're certainly growing quickly."
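Why does prefill lean on compute while decode leans on memory bandwidth? A common back-of-the-envelope model compares arithmetic intensity (FLOPs per byte read) against a GPU's "ridge point" (peak FLOP/s divided by memory bandwidth). The sketch below is a simplified illustration, not Nvidia's method: it assumes a dense model costing about 2 FLOPs per parameter per token, counts only weight reads (ignoring KV-cache and activation traffic), and uses made-up hardware numbers (`GPU_FLOPS`, `GPU_BW`) that are not Rubin CPX specifications. All names are hypothetical.

```python
# Toy roofline comparison of LLM prefill vs decode (simplified sketch).
# Assumptions (hypothetical, not from the article):
#   - dense model; only weight matmuls counted: ~2 FLOPs per parameter per token
#   - weights are read from memory once per forward pass
#   - KV-cache and activation memory traffic are ignored
#   - GPU_FLOPS and GPU_BW are illustrative numbers, not real product specs

PARAMS = 70e9          # hypothetical 70B-parameter model
BYTES_PER_PARAM = 2    # FP16/BF16 weights
GPU_FLOPS = 1.0e15     # hypothetical 1 PFLOP/s dense compute
GPU_BW = 4.0e12        # hypothetical 4 TB/s memory bandwidth


def arithmetic_intensity(tokens_per_pass: int) -> float:
    """FLOPs performed per byte of weights read in one forward pass."""
    flops = 2 * PARAMS * tokens_per_pass
    bytes_read = BYTES_PER_PARAM * PARAMS
    return flops / bytes_read


def bottleneck(tokens_per_pass: int) -> str:
    """Classify a pass by comparing its intensity to the GPU's ridge point."""
    ridge = GPU_FLOPS / GPU_BW  # FLOPs/byte where compute time equals memory time
    if arithmetic_intensity(tokens_per_pass) > ridge:
        return "compute-bound"
    return "bandwidth-bound"


if __name__ == "__main__":
    prompt_len = 32_000
    # Prefill: the whole prompt is pushed through the model in one pass.
    print("prefill:", arithmetic_intensity(prompt_len), bottleneck(prompt_len))
    # Decode: one new token per pass (batch size 1).
    print("decode: ", arithmetic_intensity(1), bottleneck(1))
```

Under these assumptions a long prompt performs thousands of FLOPs per weight byte, while single-stream decode performs about one, which is why splitting the phases across differently provisioned GPUs can make sense. Batching many decode streams raises decode intensity, but the per-stream weight and KV-cache reads still keep it closer to the bandwidth limit.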

"Over the past few years, model context windows have leapt from a mere 4,096 tokens (think word fragments, numbers, and punctuation) on Llama 2 to as many as 10 million with Meta's Llama 4 Scout, released earlier this year. These large context windows are a bit like the model's short-term memory and dictate how many tokens it can keep track of when processing and generating a response. For the average chatbot, the context required is relatively small. The ChatGPT Plus plan supports a context length of about 32,000 tokens."
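To get a feel for how prefill compute scales with these context lengths, the rough estimate below adds a linear-layer term (~2 FLOPs per parameter per token) to a causal-attention term that grows with the square of the prompt length. It is a sketch under stated assumptions: the model dimensions are hypothetical dense-transformer values (Llama 4 Scout itself is a mixture-of-experts model and is not modeled here), and real kernels, attention variants, and caching change the constants.

```python
# Rough prefill FLOP estimate as context length grows (simplified sketch).
# Hypothetical dense-transformer dimensions, assumed for illustration only.
PARAMS = 8e9      # hypothetical 8B parameters
N_LAYERS = 32     # hypothetical layer count
D_MODEL = 4096    # hypothetical hidden size


def prefill_flops(n_tokens: int) -> float:
    """Approximate forward-pass FLOPs to ingest a prompt of n_tokens.

    Linear layers: ~2 FLOPs per parameter per token.
    Attention: QK^T and AV together cost ~4 * n^2 * d per layer;
    the causal mask roughly halves that to ~2 * n^2 * d per layer.
    """
    linear = 2 * PARAMS * n_tokens
    attention = 2 * N_LAYERS * n_tokens**2 * D_MODEL
    return linear + attention


if __name__ == "__main__":
    # Context sizes mentioned in the article.
    for n in (4_096, 32_000, 10_000_000):
        print(f"{n:>12,} tokens -> ~{prefill_flops(n):.2e} FLOPs")
```

Because the attention term is quadratic, going from 4,096 to 10 million tokens multiplies the estimated prefill work by far more than the roughly 2,400x increase in tokens, which illustrates why long-context prefill is treated as a raw-compute problem.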

Nvidia unveiled the Rubin CPX GPU to accelerate extremely long-context AI workflows, targeting workloads such as code assistants while reducing its reliance on expensive, power-hungry HBM. The Dynamo framework had earlier signaled a shift toward disaggregated inference. LLM inference separates into a compute-intensive prefill phase and a memory bandwidth-bound decode phase. Disaggregated serving assigns different GPU counts to each phase to avoid compute or bandwidth bottlenecks as context sizes expand. Model context windows have grown dramatically, from 4,096 tokens to multi-million-token windows, and agentic tasks like code generation demand far more raw compute than memory bandwidth. Nvidia plans to reserve HBM-equipped GPUs for decoding.

Read at The Register
