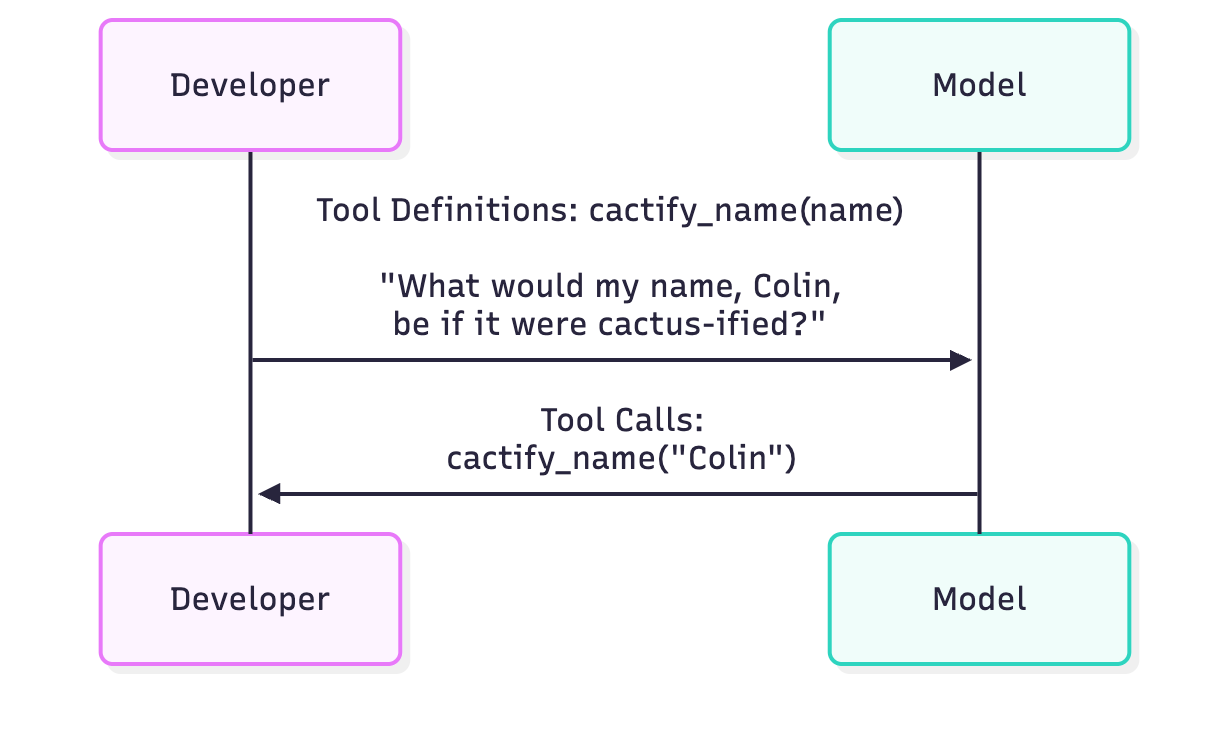

"We may want the model to query a database, call an external API, or perform calculations. Function calling (also known as tool calling) allows you to define functions (with schemas) that the model can call as part of its response. For us, this seems like a first step towards enabling more complex interactions with LLMs, where they can not only generate text but also perform actions based on that text."

"This returns a response that includes a function_call object: Reviewing the output, we see that the model decided to call our cactify_name function with the argument "Colin". The model itself doesn't actually execute the function. It simply returns the function call in its response. It's up to us to handle the function execution and return the result if needed. It's easier to see this in action using the Python SDK."

Function calling lets an LLM trigger database queries, external API calls, and computations. You describe each function with a JSON Schema and pass the schemas to the API through the tools parameter, so the model can choose to call them. The model returns a function_call object naming the chosen function and its arguments, but it does not execute the function; the application must run it and, if needed, pass the result back to the model. A simple example is a Python function that 'cactifies' a name. The raw API requests can be demonstrated with curl, while the Python SDK makes the function_call object easier to handle and inspect.
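The flow summarized above (define a schema, receive a function_call, execute it in the application) can be sketched in Python without touching the network. This is a minimal sketch under stated assumptions, not the article's code: the body of `cactify_name` is invented because the summary does not say what "cactifying" does, the `REGISTRY` dispatcher and `execute_function_call` helper are hypothetical, and the shape of the function_call dictionary (a `name` plus JSON-encoded `arguments`) is assumed rather than taken from any specific SDK.

```python
import json


def cactify_name(name: str) -> str:
    # Hypothetical behavior: the source does not say what "cactifying" does.
    return f"{name} the Cactus"


# JSON Schema description of the function, in the general shape that is
# passed through a `tools` parameter (exact field layout is an assumption).
CACTIFY_TOOL = {
    "type": "function",
    "name": "cactify_name",
    "description": "Turn a name into a cactus-themed name.",
    "parameters": {
        "type": "object",
        "properties": {
            "name": {"type": "string", "description": "The name to cactify."}
        },
        "required": ["name"],
    },
}

# Hypothetical registry mapping tool names to local Python callables.
REGISTRY = {"cactify_name": cactify_name}


def execute_function_call(call: dict) -> dict:
    """Run the function the model asked for and package the result.

    The model only describes the call; executing it is the application's job.
    """
    func = REGISTRY[call["name"]]
    kwargs = json.loads(call["arguments"])  # arguments arrive as a JSON string
    result = func(**kwargs)
    return {
        "type": "function_call_output",
        "call_id": call.get("call_id"),
        "output": str(result),
    }


# Simulated model output (assumed shape), standing in for a real API response.
simulated_call = {
    "type": "function_call",
    "call_id": "call_123",
    "name": "cactify_name",
    "arguments": '{"name": "Colin"}',
}

tool_result = execute_function_call(simulated_call)
print(tool_result["output"])
```

In a real integration, `CACTIFY_TOOL` would be sent with the request and `tool_result` would be appended to the conversation so the model can use it in its next turn. Keeping the dispatch in a registry means the application, not the model, decides which functions can actually run.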

Read at Caktusgroup

