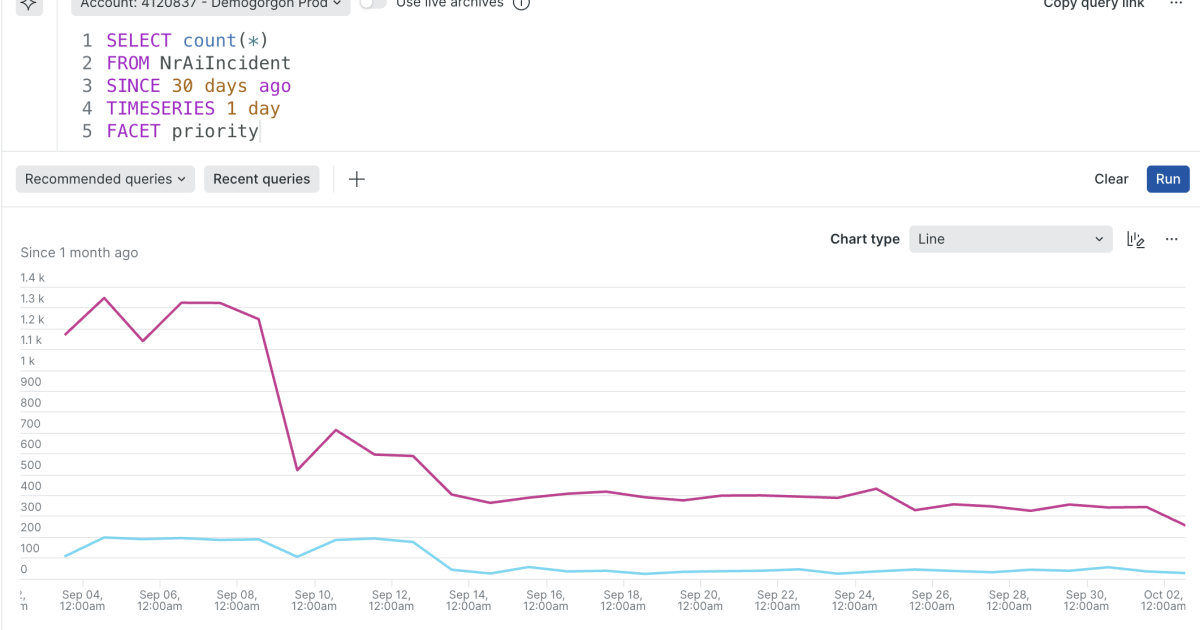

"This is where alert fatigue sets in. DevOps and SRE teams working with cloud workloads, microservices, and rapid deployments see hundreds of alerts triggered every day. Many are duplicates, some are irrelevant, and only a handful actually point to issues that demand attention. Result? When every alert screams 'critical', nothing feels urgent. Engineers spend hours triaging false positives, and important signals risk being buried. That delay directly increases mean time to resolution (MTTR), which ultimately means frustrated customers and financial loss for the business."

"The underlying issue is that traditional, threshold-based alerting wasn't designed for such dynamic environments. Static rules break when baselines shift constantly. To keep pace, teams need intelligent alerting that uses AI to cut through the noise, detect anomalies in real time, and surface what truly matters."

Modern applications generate large volumes of alerts across dashboards, logs, and monitoring systems, and the result is noise rather than clear signal. DevOps and SRE teams face duplicate, irrelevant, or low-value alerts that consume hours of triage and obscure genuine incidents. Static, threshold-based alerting fails in dynamic environments because baselines shift, so routine load can look like a spike. Intelligent alerting uses AI and machine learning to learn normal system behavior, detect anomalies in real time, reduce false positives and duplicates, and prioritize actionable incidents. That shortens mean time to resolution (MTTR), which in turn limits customer impact and financial loss.
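To make the contrast between a static threshold and a learned baseline concrete, here is a minimal sketch in Python. It is not New Relic's method. It swaps in a simple rolling z-score as a stand-in for "learning normal behavior", and the function names, the window size, the z-score limit, and the sample latency values are all assumptions made for illustration.

```python
from collections import deque
from statistics import mean, stdev


def static_alerts(values, threshold):
    """Return the indices of samples that exceed a fixed threshold."""
    return [i for i, v in enumerate(values) if v > threshold]


def adaptive_alerts(values, window=5, z_limit=3.0):
    """Return the indices of samples that deviate from a rolling baseline.

    The baseline is the mean of the previous `window` samples. A sample is
    flagged when it lies more than `z_limit` sample standard deviations
    away from that mean. Nothing is flagged until the window is full.
    """
    history = deque(maxlen=window)
    alerts = []
    for i, v in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            # A zero sigma means the window was perfectly flat; skip it
            # rather than divide by zero.
            if sigma > 0 and abs(v - mu) / sigma > z_limit:
                alerts.append(i)
        history.append(v)
    return alerts


# Hypothetical latency samples (ms): the service now idles around 100 ms,
# with a single genuine spike at index 6.
latency_ms = [100, 102, 98, 101, 99, 100, 300, 101, 99]

# A threshold of 90 ms, tuned for an older and quieter baseline,
# fires on every sample.
print(static_alerts(latency_ms, threshold=90))  # [0, 1, 2, 3, 4, 5, 6, 7, 8]

# The rolling baseline flags only the spike.
print(adaptive_alerts(latency_ms))  # [6]
```

One limitation of this sketch: once the spike enters the window it inflates the standard deviation, so a second anomaly arriving shortly afterwards could be missed. Production systems typically use more robust statistics or trained models for that reason.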

Read at New Relic
