"For this test, we're comparing the default models that both OpenAI and Google present to users who don't pay for a regular subscription: ChatGPT 5.2 for OpenAI and Gemini 3.2 Fast for Google. While other models might be more powerful, we felt this test best recreates the AI experience as it would work for the vast majority of Siri users, who don't pay to subscribe to either company's services."

"As in the past, we'll feed the same prompts to both models and evaluate the results using a combination of objective evaluation and subjective feel. Rather than re-using the relatively simple prompts we ran back in 2023, though, we'll be running these models on an updated set of more complex prompts that we first used when pitting GPT-5 against GPT-4o last summer."

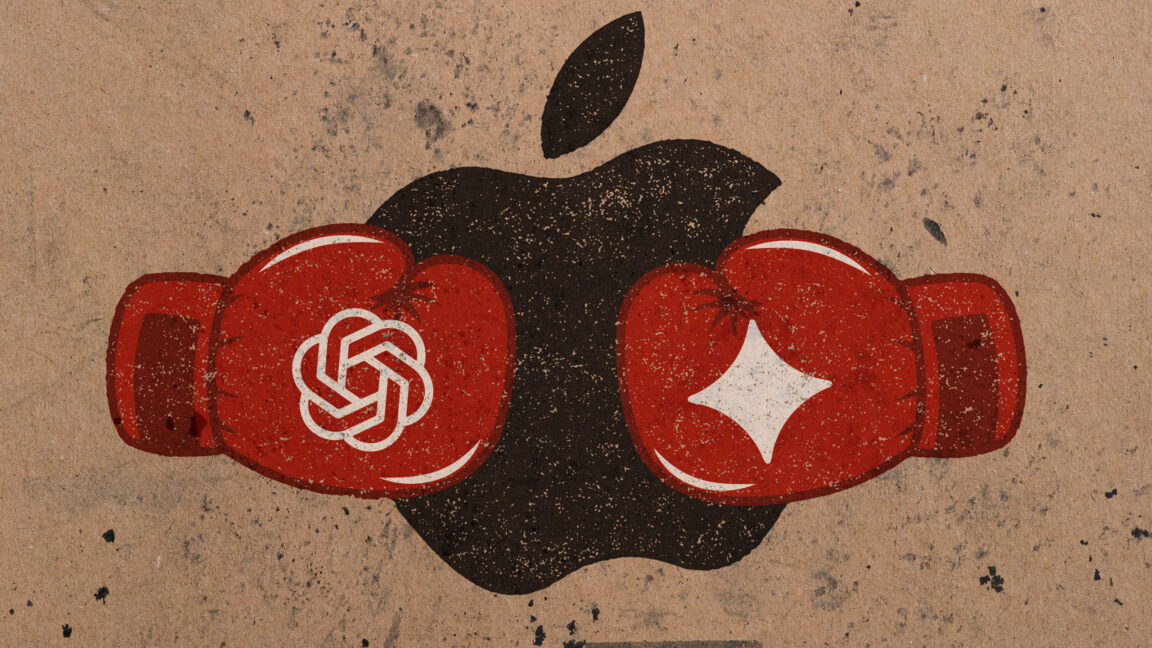

Previous comparative tests of OpenAI and Google models took place in late 2023, when Google's offering was Bard. Apple has partnered with Google to use Gemini to power the next generation of the Siri voice assistant. The comparison focuses on the default, non-subscriber models, ChatGPT 5.2 and Gemini 3.2 Fast, which represent the experience of most Siri users. Both models received identical prompts, and the results were judged using objective measures and subjective impressions. The prompt set consisted of more complex tasks drawn from prior GPT-5 versus GPT-4o evaluations. The results reveal clear stylistic and practical differences between the two models, as well as difficulty producing truly original content: Gemini returned verbatim jokes found online.

Read at Ars Technica

