"Large context windows in AI models promise vast data handling and perfect recall, but in practice they often fall short of that promise."

"Ultra-large context deployments face steep costs: the price per query can skyrocket, which complicates their use in production settings."

"Users increasingly expect verifiable results, but large-context models lack citation support, which can undermine trust and transparency in AI-generated responses."

"When workflows involve multiple LLM calls, large-context approaches can become impractical because costs and latency compound with every call."

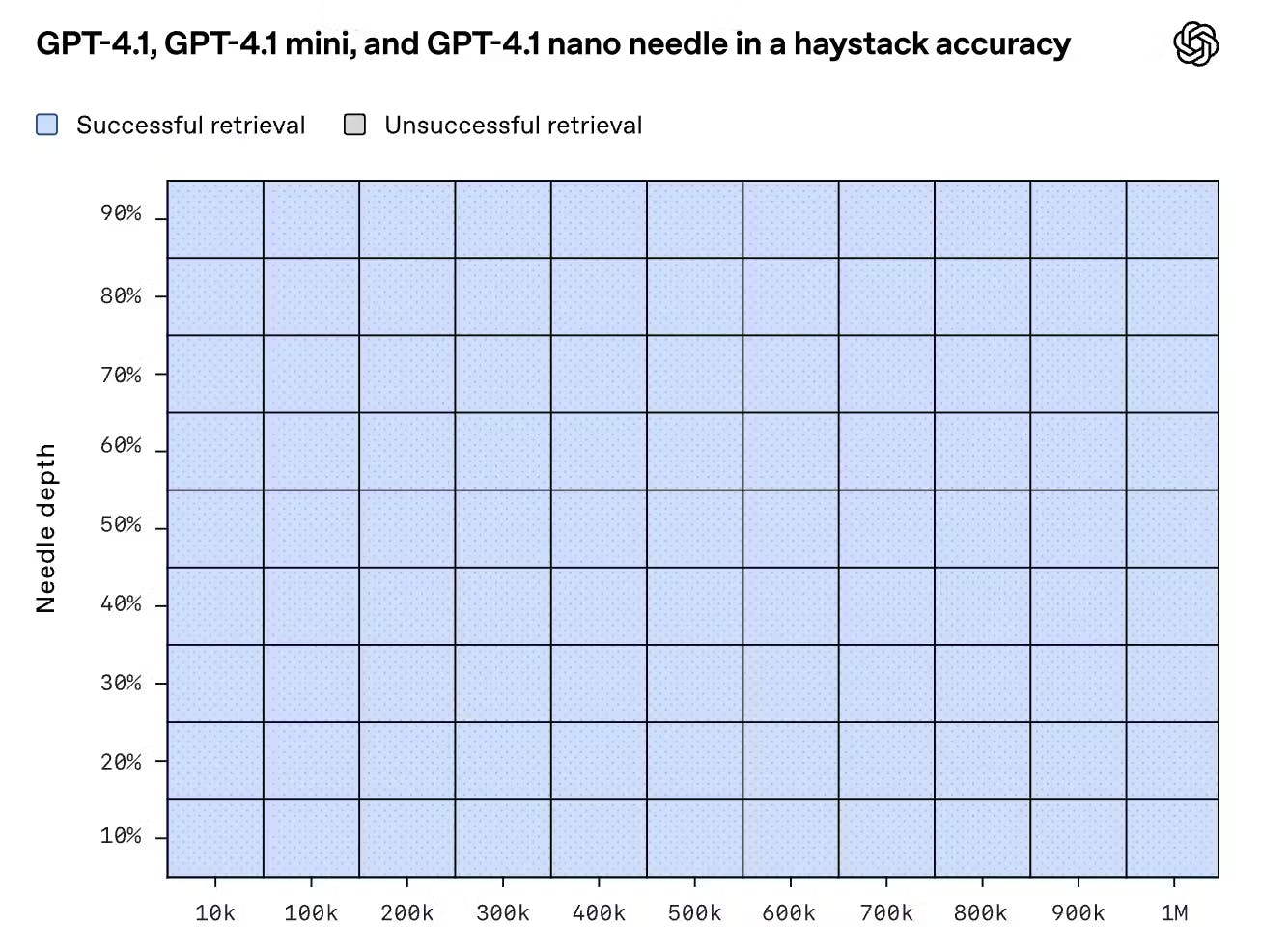

OpenAI's announcement of GPT-4.1, with its 1M-token context window, has met with skepticism about its practicality. While ultra-large context promises easy data handling and perfect recall, its costs, latency, and lack of citation support present significant barriers. Per-query costs can rise dramatically, from $0.002 to $2, which creates challenges for production-scale workflows. As workflows become more agentic and require multiple LLM calls, these practical considerations erode the benefits of large context windows, raising doubts about their utility in real-world applications.
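The $0.002-to-$2 spread comes down to simple per-token arithmetic. What follows is a minimal Python sketch, assuming a flat input price of $2 per million tokens (chosen to reproduce the figures quoted above, not taken from a published price sheet), ignoring output tokens and latency, and assuming every call in an agentic workflow resends the full context. The names `query_cost` and `workflow_cost` are hypothetical.

```python
# Assumed flat input price in US dollars per million tokens.
# Picked so that a 1M-token prompt costs $2, matching the figures quoted above.
PRICE_PER_MILLION_INPUT_TOKENS = 2.00


def query_cost(input_tokens: int,
               price_per_million: float = PRICE_PER_MILLION_INPUT_TOKENS) -> float:
    """Input-token cost of a single LLM call (output tokens are ignored)."""
    return input_tokens * price_per_million / 1_000_000


def workflow_cost(input_tokens_per_call: int, num_calls: int) -> float:
    """Total input cost when every call in a workflow resends the same context."""
    return num_calls * query_cost(input_tokens_per_call)


if __name__ == "__main__":
    small = query_cost(1_000)      # focused prompt of roughly 1K tokens
    large = query_cost(1_000_000)  # prompt that fills a 1M-token window
    print(f"single call: ${small:.3f} vs ${large:.2f}")
    # Hypothetical agentic workflow with 10 sequential calls.
    print(f"10-call workflow: ${workflow_cost(1_000, 10):.2f} "
          f"vs ${workflow_cost(1_000_000, 10):.2f}")
```

Running it prints a 1,000× gap per call ($0.002 vs $2.00), and that gap carries straight through to the 10-call workflow ($0.02 vs $20.00), which is why compounding calls hurt large-context designs most.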

Read at Hackernoon
