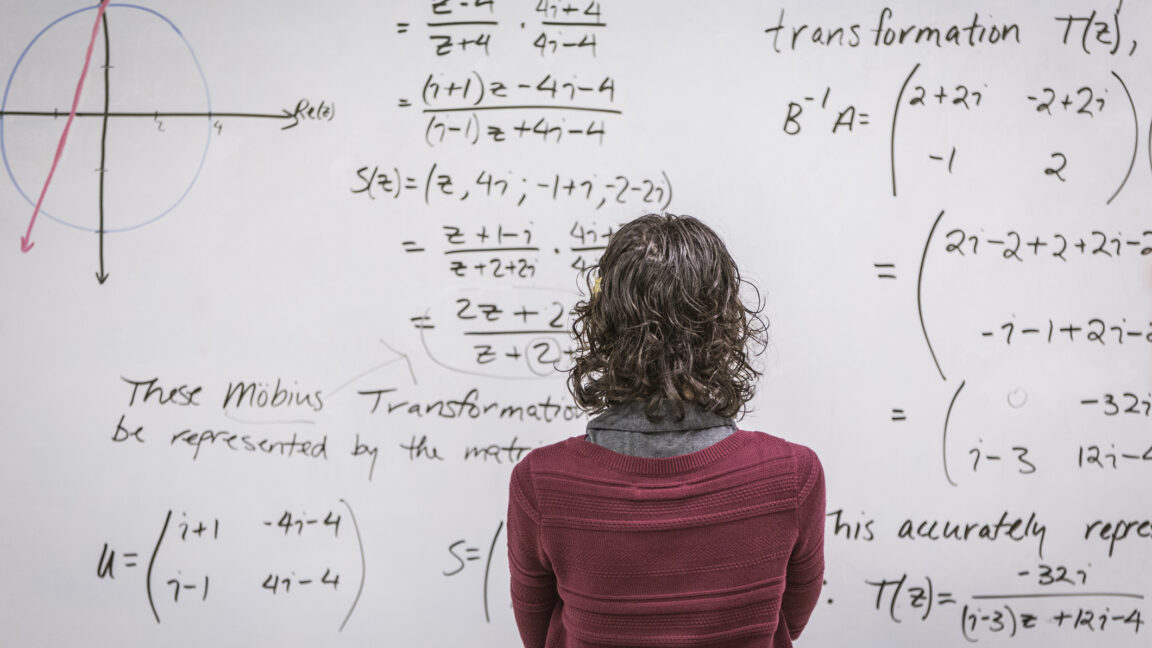

"The reason computers fared poorly in math competitions is that, while they far surpass humanity's ability to perform calculations, they are not really that good at the logic and reasoning that is needed for advanced math. Put differently, they are good at performing calculations really quickly, but they usually suck at understanding why they're doing them. While something like addition seems simple, humans can do semi-formal proofs based on definitions of addition."
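As a concrete illustration of a semi-formal proof "based on definitions of addition," here is the textbook Peano-style derivation of 1 + 1 = 2. It assumes the usual conventions, which the quote itself does not spell out: 0 is a natural number, S is the successor function, 1 and 2 abbreviate S(0) and S(S(0)), and addition is defined by recursion on its second argument (labeled A1 and A2 here for reference).

```latex
% Assumed definitions: 1 := S(0),  2 := S(1) = S(S(0))
% (A1)  a + 0    = a
% (A2)  a + S(b) = S(a + b)
\begin{align*}
1 + 1 &= 1 + S(0) && \text{definition of } 1\\
      &= S(1 + 0) && \text{(A2) with } a = 1,\ b = 0\\
      &= S(1)     && \text{(A1) with } a = 1\\
      &= 2        && \text{definition of } 2
\end{align*}
```

Each line is justified by exactly one definition or equation, which is the kind of explicit bookkeeping a fully formal system demands at every step.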

"To perform a proof, humans have to understand the very structure of mathematics. The way mathematicians build proofs, how many steps they need to arrive at the conclusion, and how cleverly they design those steps are a testament to their brilliance, ingenuity, and mathematical elegance. 'You know, Bertrand Russell published a 500-page book to prove that one plus one equals two,' says Thomas Hubert, a DeepMind researcher and lead author of the AlphaProof study."

Computers excel at calculation but have historically struggled with the logical reasoning that advanced mathematics requires. Humans construct proofs by understanding mathematical structures, choosing steps, and sometimes working in formal systems such as Peano arithmetic. DeepMind developed AlphaProof to bridge this gap by combining large language models with formal proof assistants and a translator that maps informal math into formal representations. AlphaProof proposed proofs that automated verifiers then checked, and the system occasionally required human guidance. AlphaProof matched silver-medalist performance at the 2024 International Mathematical Olympiad and nearly achieved gold-level results on the Putnam, signaling progress in automated theorem proving.
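To show what "checked by automated verifiers" can mean in practice, here is a minimal sketch in Lean 4, a proof assistant DeepMind has reported using for AlphaProof. It assumes only Lean's core library (no Mathlib) and defines Peano-style naturals from scratch, then proves 1 + 1 = 2 so that Lean's kernel checks every step. The names `Peano`, `PeanoNat`, `add`, `one`, `two`, and `one_add_one` are hypothetical illustrations chosen here; this is not AlphaProof's code, and the source does not describe how AlphaProof encodes arithmetic.

```lean
namespace Peano

-- Natural numbers generated by zero and the successor function S.
inductive PeanoNat where
  | zero : PeanoNat
  | succ : PeanoNat → PeanoNat

open PeanoNat

-- Addition by recursion on the second argument:
--   a + 0    = a
--   a + S(b) = S(a + b)
def add : PeanoNat → PeanoNat → PeanoNat
  | a, zero   => a
  | a, succ b => succ (add a b)

def one : PeanoNat := succ zero
def two : PeanoNat := succ one

-- Each `calc` step is closed by `rfl`: Lean checks that both sides
-- reduce to the same term after unfolding the definitions above.
theorem one_add_one : add one one = two :=
  calc add one one = add one (succ zero) := rfl  -- definition of `one`
    _ = succ (add one zero) := rfl               -- second equation of `add`
    _ = succ one := rfl                          -- first equation of `add`
    _ = two := rfl                               -- definition of `two`

end Peano
```

Defining the type from scratch, rather than reusing Lean's built-in `Nat`, keeps the proof tied to the two recursion equations; if any step were wrong, Lean would reject the file instead of accepting the theorem.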

Read at Ars Technica
