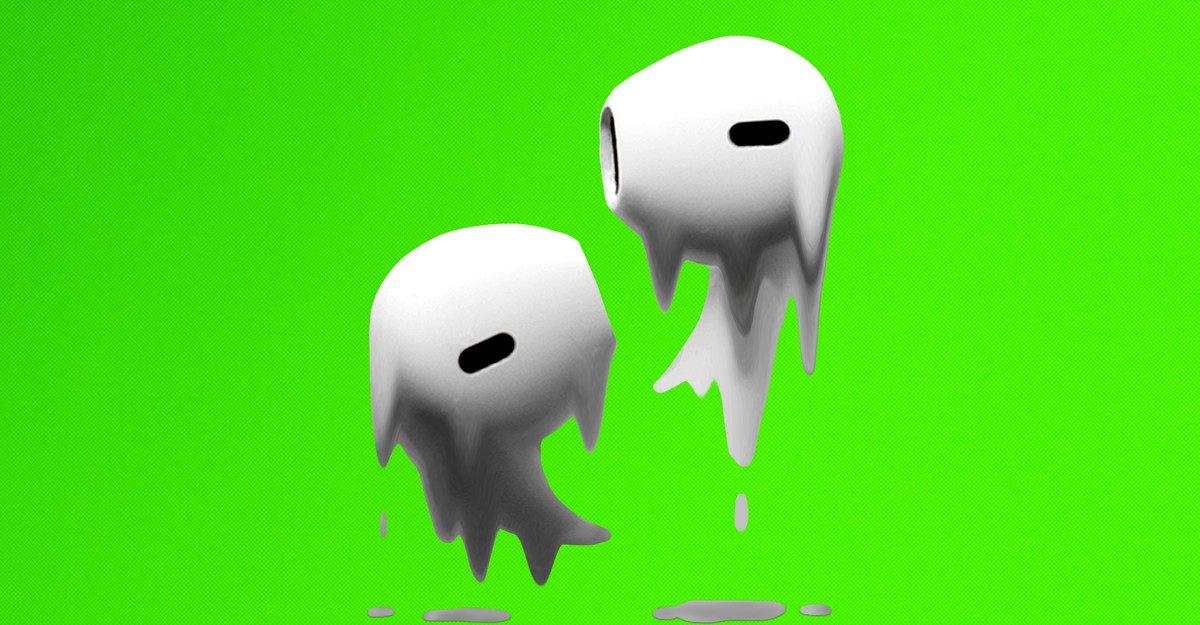

"Earlier this week, I stopped for breakfast in Sunset Park, Brooklyn, a largely Hispanic neighborhood where street vendors sell tamales and rice pudding out of orange Gatorade coolers. I speak some Spanish, but I wanted to test out Apple's new 'Live Translation' feature, which has been advertised as a sort of interpreter in your ears. I popped in my AirPods, pulled up the Translate app, and approached."

"The vendor had already begun explaining her offerings to me in a mix of Spanish and English, but the AirPods drowned out most of her words. I asked a question, in English, about the tamale fillings, then realized that I had to press an on-screen 'Play' button for it to be read aloud by my device in Spanish. The vendor smiled (or maybe grimaced) and then responded."

"That final word is neither translated nor a word, but I took it to mean guajillo, a kind of chili pepper. Slices seemed to be a misunderstanding of the Spanish word rajas, which does technically mean 'slices' but has a regional meaning, as in rajas con crema, or strips of roasted poblano peppers with cream (and in this case, cheese or chicken)."

The writer tested Apple's Live Translation feature with AirPods at a tamale vendor in Sunset Park and ran into ambient-noise warnings and audible interruptions. The device cut into the conversation with a loud system message and required an on-screen Play button before reading translations aloud, which created awkward delays. Several translated words were wrong or nonsensical: food items were misrendered, and some terms were left untranslated, obscuring their meaning. Overlapping live speech and lagging translations produced unintelligible fragments. The writer relied on contextual knowledge to infer which fillings were on offer and ordered salsa verde to avoid the risk of a mistranslated order.

Read at The Atlantic
