"The big picture: Patients see ChatGPT as an 'ally' in navigating their health care, according to analysis of anonymized interactions with ChatGPT and a survey of ChatGPT users by the AI-powered tool Knit. Users turn to ChatGPT to decode medical bills, spot overcharges, appeal insurance denials, and when access to doctors is limited, some even use it to self-diagnose or manage their care."

"By the numbers: More than 5% of all ChatGPT messages globally are about health care. OpenAI found that users ask 1.6 to 1.9 million health insurance questions per week for guidance comparing plans, handling claims and billing and other coverage queries. In underserved rural communities, OpenAI says users send an average of nearly 600,000 health care-related messages every week. Seven in 10 health care conversations in ChatGPT happen outside of normal clinic hours."

"Zoom in: Patients can enter symptoms, prior advice from doctors, and context around their health-care issues and ChatGPT can deliver warnings on the severity of certain conditions. When care isn't available, this can help patients decide if they should wait for appointments or if they need to seek emergency care. 'Reliability improves when answers are grounded in the right patient-specific context such as insurance plan documents, clinical instructions, and health care portal data,' OpenAI says in the report."

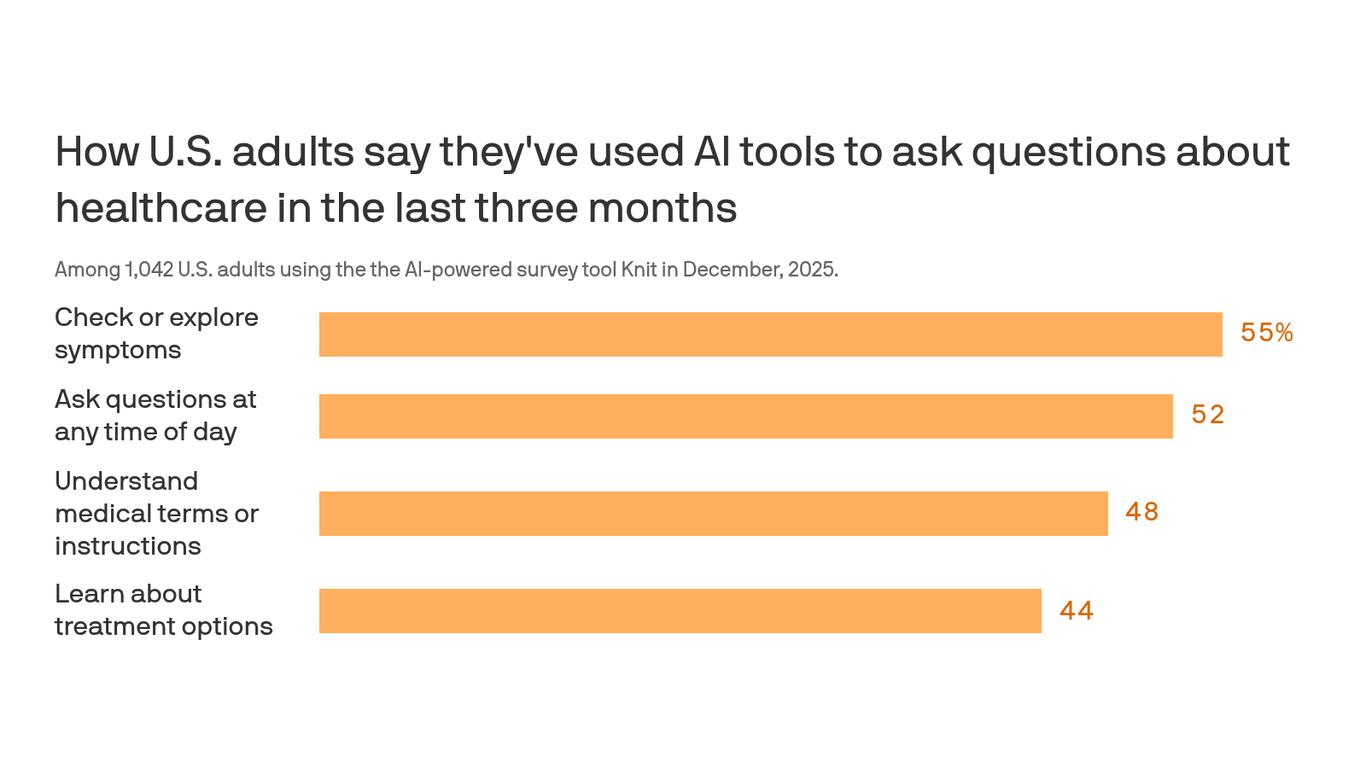

ChatGPT handles a substantial share of health-related inquiries: more than 5% of global ChatGPT messages address health care. Users ask 1.6 to 1.9 million health insurance questions each week, and users in underserved rural communities send nearly 600,000 health-care messages weekly. Seven in 10 health conversations occur outside normal clinic hours. Patients use ChatGPT to decode medical bills, spot overcharges, appeal denials, compare plans, and assess symptoms or treatment options when access to care is limited. Reliability improves when answers incorporate patient-specific context such as insurance documents, clinical instructions, and portal data. Incorrect advice is particularly risky in mental health contexts, where it has prompted lawsuits and state bans on AI mental health decision-making.

Read at Axios
