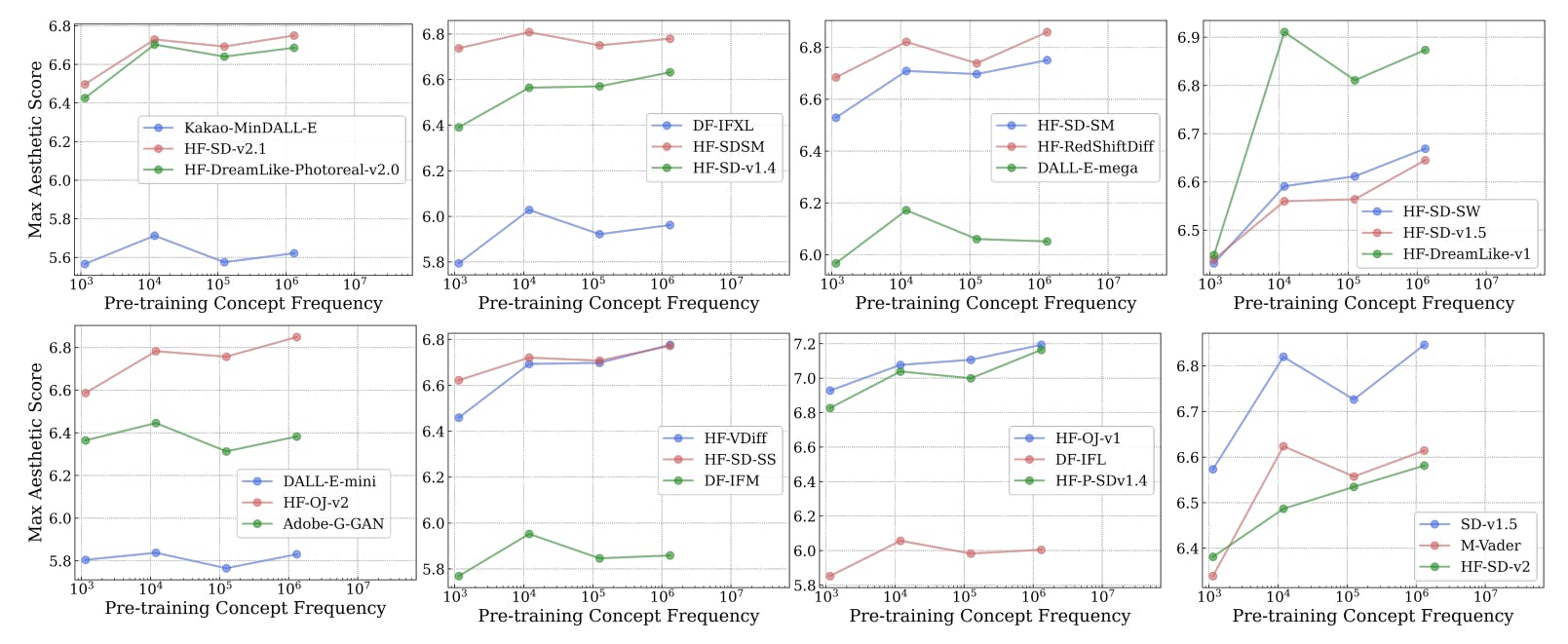

"Concept frequency serves as a significant predictor for the zero-shot performance of models. The statistical analysis shows that varying frequencies directly impact the model's predictive capabilities in a consistent manner, regardless of filtering data types."

"Results indicate that models showcasing greater concept frequencies demonstrate better performance in zero-shot tasks. This trend persists when examining independent image and text domains, reinforcing the relationship between training data frequency and model efficacy."

Concept frequency in the pretraining data is a strong predictor of zero-shot performance. Statistical evaluation shows a log-linear relationship: zero-shot performance on a concept rises roughly linearly with the logarithm of how often that concept appears in the pretraining data. The relationship holds for concepts derived from both the image and the text domains, and across different experimental setups. Concepts that occur more frequently in pretraining data are the ones models handle better in zero-shot tasks, which underlines how much the composition of the pretraining dataset matters for model results.
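To make the log-linear relationship concrete, here is a minimal sketch of how such a trend could be checked: fit accuracy against the base-10 logarithm of concept frequency and report the Pearson correlation. The function name `fit_log_linear` and all numbers are hypothetical and purely illustrative. They are not data or code from the article.

```python
import math


def fit_log_linear(frequencies, accuracies):
    """Least-squares fit of accuracy = intercept + slope * log10(frequency).

    Returns (slope, intercept, pearson_r).
    """
    xs = [math.log10(f) for f in frequencies]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(accuracies) / n

    # Sums of squares and cross-products around the means
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in accuracies)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, accuracies))

    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    pearson_r = sxy / math.sqrt(sxx * syy)
    return slope, intercept, pearson_r


# Synthetic, made-up values for illustration only (not results from the article):
# how often each concept appears in pretraining data, and zero-shot accuracy on it.
frequencies = [10, 100, 1_000, 10_000, 100_000]
accuracies = [0.10, 0.20, 0.30, 0.40, 0.50]

slope, intercept, pearson_r = fit_log_linear(frequencies, accuracies)
print(f"slope per 10x frequency: {slope:.3f}, intercept: {intercept:.3f}, r: {pearson_r:.3f}")
```

Under a fit of this form, `slope` is the accuracy gained per tenfold increase in concept frequency, so each further linear gain in accuracy requires exponentially more occurrences of the concept.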

Read at Hackernoon
