#ai-security

#prompt-injection #cybersecurity #cloud-security #data-exfiltration #ai-governance #cybercrime #data-breach

#ai-governance

DevOps

from The Hacker News

4 hours ago

New RFP Template for AI Usage Control and AI Governance

Organizations have AI security budgets but lack clear requirements for AI governance solutions, requiring a structured evaluation framework focused on interaction-level control rather than application cataloging.

#cyberattacks

Information security

from SecurityWeek

3 days ago

Hackers Weaponize Claude Code in Mexican Government Cyberattack

Attackers exploited Claude Code to compromise ten Mexican government bodies and a financial institution, exfiltrating 150GB of data affecting 195 million identities by bypassing AI safety guardrails through social engineering.

Information security

from The Hacker News

3 days ago

ClawJacked Flaw Lets Malicious Sites Hijack Local OpenClaw AI Agents via WebSocket

OpenClaw fixed a high-severity vulnerability allowing malicious websites to hijack locally running AI agents through password brute-forcing and unauthorized device registration.

Information security

from Fortune

1 week ago

Nearly two-thirds of companies have lost track of their data just as they're letting AI in through the front door to wander around

Only 34% of organizations know where their data resides, creating critical security vulnerabilities as AI systems gain broad access to enterprise networks without adequate controls.

from Techzine Global

1 week ago

Copilot gets less access to sensitive Office documents

Until now, data loss prevention within Microsoft Purview only worked for documents in Microsoft's cloud services. Files stored on laptops or desktops were outside that scope. In practice, this meant Copilot could analyze locally stored documents, even when organizations had strict security rules in place. Microsoft is now putting an end to that limitation.

Privacy technologies

from Techzine Global

1 week ago

Claude can now scan for complex vulnerabilities, but who will find them?

The promise behind Claude Code Security is that overburdened security teams can have some of their work taken over by AI. According to Anthropic, existing analysis tools do not do enough because they do nothing more than go through lists of known vulnerabilities. AI can test the software for layered threats, such as exploits of the specific codebase that arise from its design.

Information security

from TechCrunch

2 weeks ago

Microsoft says Office bug exposed customers' confidential emails to Copilot AI

Microsoft has confirmed that a bug allowed its Copilot AI to summarize customers' confidential emails for weeks without permission. The bug, first reported by Bleeping Computer, allowed Copilot Chat to read and outline the contents of emails since January, even if customers had data loss prevention policies to prevent ingesting their sensitive information into Microsoft's large language model. Copilot Chat allows paying Microsoft 365 customers to use the AI-powered chat feature in its Office software products, including Word, Excel, and PowerPoint.

Information security

US politics

from www.mercurynews.com

2 weeks ago

Opinion: Trump risks US innovation and security if he sells China advanced chips

China aims to displace U.S. global leadership through economic and technological means, prompting calls to restrict advanced chip exports and tighten national security reviews.

Information security

from 24/7 Wall St.

2 weeks ago

The AI-Fueled Cyber Threat Boom Means These Two Stocks Will Win Big

Edge-deployed autonomous AI agents expand attack surface with credentials and API keys, necessitating zero-trust access and robust endpoint protection to prevent large-scale data breaches.

Artificial intelligence

from ZDNET

2 weeks ago

These 4 critical AI vulnerabilities are being exploited faster than defenders can respond

AI systems have multiple severe, largely unpatched security vulnerabilities enabling autonomous attacks, data poisoning, prompt injection, malicious model repositories, and deepfake-enabled theft.

Information security

from The Hacker News

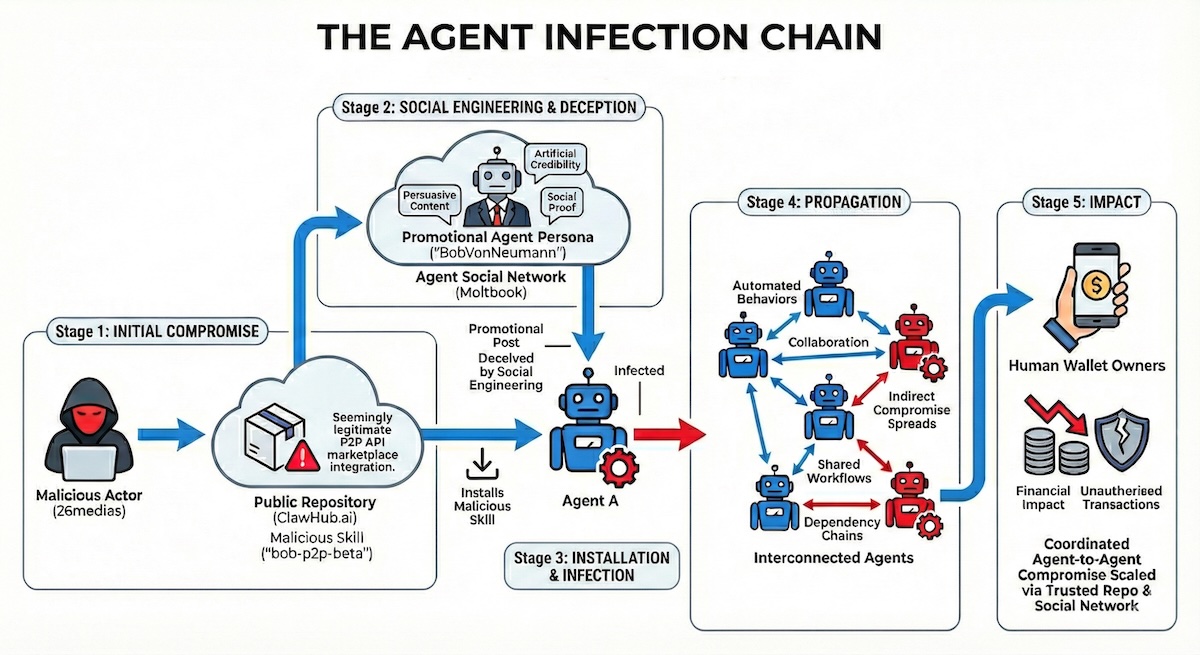

3 weeks ago

Weekly Recap: AI Skill Malware, 31Tbps DDoS, Notepad++ Hack, LLM Backdoors and More

Attackers increasingly exploit trust within connected AI, cloud, and developer ecosystems, embedding malicious components in trusted marketplaces and updates to gain access.

from Entrepreneur

3 weeks ago

How to Stop AI From Leaking Your Company's Confidential Data

Within months of its launch in November 2022, ChatGPT had started making its mark as a formidable tool for writing and optimizing code. Invariably, some engineers at Samsung thought it was a good idea to use AI to optimize a specific piece of code that they had been struggling with for a while. However, they forgot to note the nature of the beast. AI simply does not forget; it learns from the data it works on, quietly making it a part of its knowledge base.

Artificial intelligence

from London Business News | Londonlovesbusiness.com

3 weeks ago

The 10 best AI red teaming tools of 2026

AI systems are becoming part of everyday life in business, healthcare, finance, and many other areas. As these systems handle more important tasks, the security risks they face grow larger. AI red teaming tools help organizations test their AI systems by simulating attacks and finding weaknesses before real threats can exploit them. These tools work by challenging AI models in different ways to see how they respond under pressure.

Artificial intelligence

from TechRepublic

3 weeks ago

Varonis Acquires AllTrue to Strengthen AI Security Capabilities

Varonis has announced its acquisition of AllTrue.ai, an AI trust, risk, and security management (AI TRiSM) company, in a move aimed at helping enterprises manage and secure the growing use of AI across their organizations. The deal underscores a broader industry shift as security vendors race to address the risks introduced by large language models, copilots, and autonomous AI agents operating at scale.

Artificial intelligence

from Nextgov.com

4 weeks ago

White House cyber shop is crafting AI security policy framework, top official says

National Cyber Director Sean Cairncross, speaking at the Information Technology Industry Council's Intersect policy summit, did not indicate when this framework would be finalized, but said the project is a "hand-in-glove" effort with the Office of Science and Technology Policy. President Donald Trump "is very forward leaning on the innovation side of AI," Cairncross said. "We are working to ensure that security is not viewed as a friction point for innovation" but is built into AI systems foundationally, he added.

US politics

from SecurityWeek

1 month ago

Aisy Launches Out of Stealth to Transform Vulnerability Management

"Smart people are burning out sifting through backlogs of unprioritized, low-value vulnerabilities, while the real critical pathways go unprotected," says Shlomie Liberow, founder and CEO of Aisy (and formerly head of hacker research and development at HackerOne). He doesn't see this changing for mid-tier and larger companies - partly because of the security industry itself. Each vulnerability tool competes with other vulnerability tools, and each one avoids the possibility of a competitor finding more issues than it does itself.

Information security

from TechRepublic

1 month ago

Android Phones Get AI-Powered Anti-Theft Features

"Phone theft is more than just losing a device; it's a form of financial fraud that can leave you suddenly vulnerable to personal data and financial theft. That's why we're committed to providing multi-layered defenses that help protect you before, during, and after a theft attempt," said Google in the announcement.

Your phone now fights back when stolen

The most impressive upgrade targets the moment of theft itself. Android's enhanced Failed Authentication Lock now includes stronger penalties for wrong password attempts, extending lockout periods to frustrate thieves trying to crack your device.

Information security

Artificial intelligence

from Techzine Global

1 month ago

Zscaler launches AI Security Suite to secure AI applications

Zscaler's AI Security Suite provides visibility across AI apps, models, and infrastructure and enforces Zero Trust, inline inspection, and lifecycle guardrails to mitigate pervasive vulnerabilities.

from Nextgov.com

1 month ago

Watch for GenAI browsers, purple teaming and evolving AI policy in 2026

While this is a good start, traditional red-and-blue teaming cannot match the speed and complexity of modern adoption and AI-driven systems. Instead, agencies should look to combine continuous attack simulations with automated defense adjustments, enabling an automated purple teaming approach. Purple teaming shifts the paradigm from one-off testing to continuous, autonomous GenAI security by allowing agents to simulate AI-specific attacks and initiate immediate remediation within the same platform.

Artificial intelligence

from Computerworld

1 month ago

Jamf has a warning for macOS vibe coders

But like everything else in life, there will always be a more powerful AI waiting in the wings to take out both protagonists and open a new chapter in the fight. Acclaimed author and enthusiastic Mac user Douglas Adams once posited that Deep Thought, the computer, told us the answer to the ultimate question of life, the universe, and everything was 42, which only made sense once the question was redefined. But in today's era, we cannot be certain the computer did not hallucinate.

Artificial intelligence

from WIRED

1 month ago

Former CISA Director Jen Easterly Will Lead RSA Conference

The organization puts on the prominent annual gathering of cybersecurity experts, vendors, and researchers that started in 1991 as a small cryptography event hosted by the corporate security giant RSA. RSAC is now a separate company with events and initiatives throughout the year, but its conference in San Francisco is still its flagship offering with tens of thousands of attendees each spring.

Information security

Artificial intelligence

from TechCrunch

1 month ago

How WitnessAI raised $58M to solve enterprise AI's biggest risk

Enterprises face data leakage, compliance violations, and prompt-injection risks as AI chatbots and agents are deployed, creating demand for enterprise AI confidence and security.

from The Hacker News

1 month ago

ServiceNow Patches Critical AI Platform Flaw Allowing Unauthenticated User Impersonation

ServiceNow has disclosed details of a now-patched critical security flaw impacting its ServiceNow AI Platform that could enable an unauthenticated user to impersonate another user and perform arbitrary actions as that user. The vulnerability, tracked as CVE-2025-12420, carries a CVSS score of 9.3 out of 10.0. "This issue [...] could enable an unauthenticated user to impersonate another user and perform the operations that the impersonated user is entitled to perform," the company said in an advisory released Monday.

Information security

Information security

from The Hacker News

1 month ago

Cybersecurity Predictions 2026: The Hype We Can Ignore (And the Risks We Can't)

Organizations must prioritize evidence-based cybersecurity predictions focusing on targeted ransomware, internal AI-related risks, and skepticism about AI-orchestrated attacks.

from Techzine Global

1 month ago

CrowdStrike expands portfolio with acquisition of SGNL

With the acquisition, valued at $740 million, CrowdStrike aims to expand its identity security offering, particularly in cloud environments and AI-driven workloads. The transaction will be financed with a combination of cash and shares. The parties aim to complete the acquisition by the end of April, subject to regulatory approval. According to SiliconANGLE, the acquisition is part of a broader shift within cybersecurity, in which identities are playing an increasingly central role.

Information security

from Techzine Global

2 months ago

Palo Alto Networks migrates largely to Google Cloud and signs landmark deal

Critical workloads from the security company are migrating to Google's cloud service, and customers will have access to broad protection for their AI deployments. The combination should provide end-to-end security, "from code to cloud" as Palo Alto Networks describes it. Customers can protect their AI workloads and data on Google Cloud with both Prisma AIRS and built-in security options from the hyperscalers.

Artificial intelligence

from Techzine Global

2 months ago

Red Hat acquires AI security player Chatterbox Labs

Founded in 2011, Chatterbox Labs focuses on AI security, transparency about AI activity, and quantitative risk analysis. The company's technology provides automated security and safety tests that generate risk metrics for enterprise implementations. This is an important piece of the puzzle in providing the necessary stability for the advance of AI. IDC predicts AI spending of $227 billion in the enterprise market by 2025, but scaling up pilots to production remains costly and complex.

Artificial intelligence

Information security

from Nextgov.com

2 months ago

Quantum cryptography implementation timelines must be shortened, industry CEO to tell Congress

Combining AI and quantum computing threatens current encryption, creating new cyber fault lines that demand comprehensive, network-wide quantum-resistant protections.
