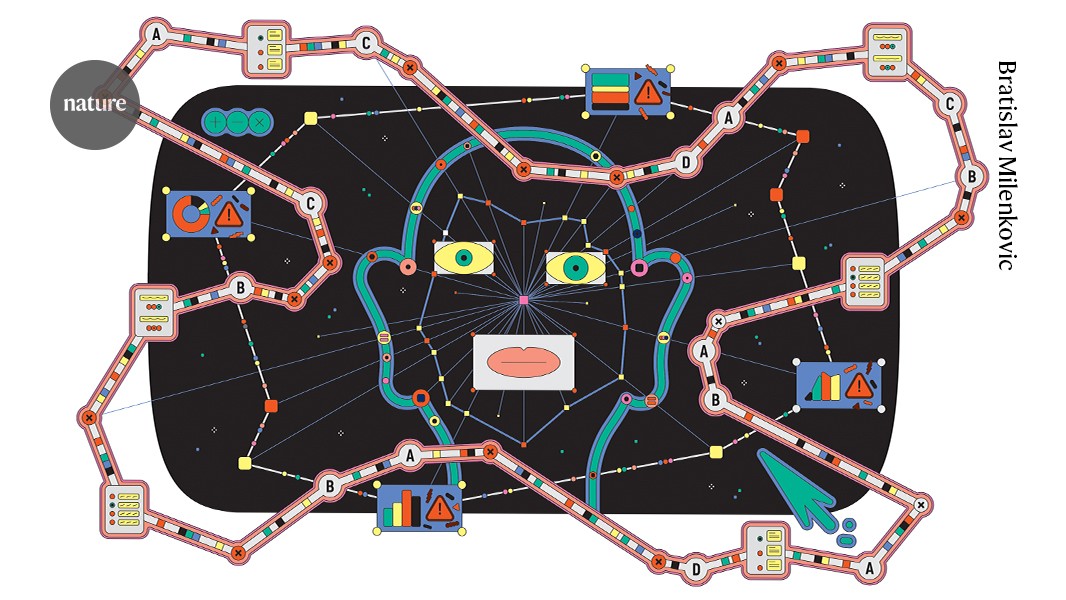

"This problem is exacerbated in studies targeting specialized populations or marginalized communities because the intended participants are harder to reach and are often recruited online, raising the risk of fraud and interference by automated programs called bots. Those percentages are much higher than the amount of fraudulent responses most studies can cope with, if they are to produce results that are statistically valid: even 3-7% of polluted data can distort results, rendering interpretations inaccurate."

"The use of artificial intelligence for crafting responses is on the rise, too. For instance, answers mediated by large language models (LLMs) accounted for up to 45% of submissions in one 2025 study. The advent of AI agents that can autonomously interact with websites is set to escalate the problem, because such agents make the production of authentic-looking survey responses trivially easy, even for people without coding experience."

Online recruitment platforms such as Mechanical Turk, Prolific, Prime Panels and Lucid enable rapid, low-cost survey participation but suffer high rates of inauthentic responses. Between 30% and 90% of survey submissions can be fraudulent or inauthentic, yet even 3–7% polluted data can distort statistical results. Fraud risk rises for specialized or marginalized populations and is worsened by scripts, bots, tutorials and AI use; one 2025 study found that up to 45% of submissions were mediated by large language models. Emerging autonomous AI agents will further lower the barrier to producing convincing fake responses, escalating the threat to data validity.

Read at Nature
