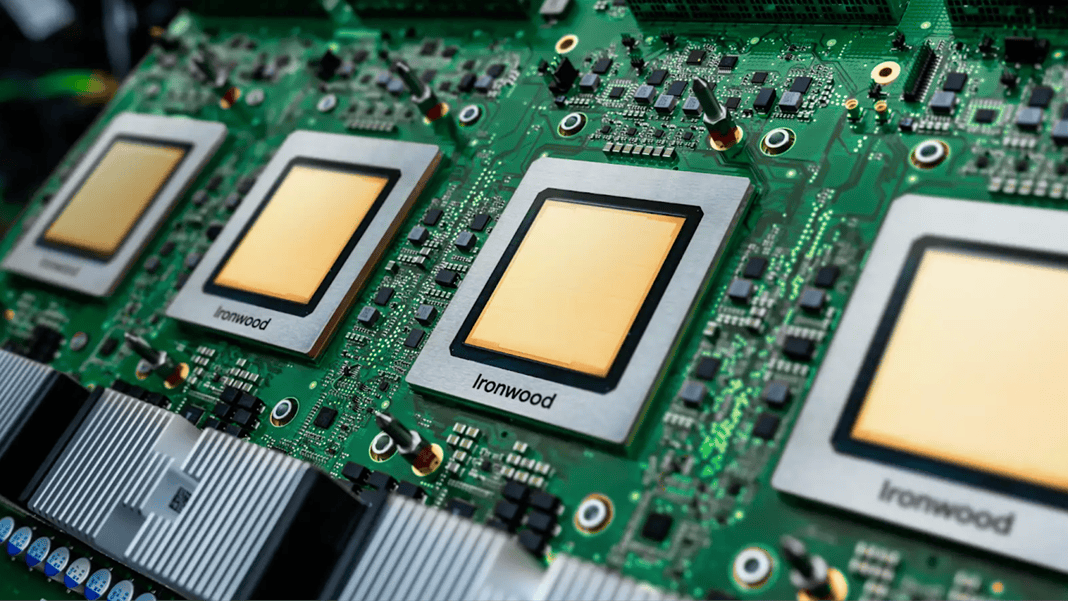

"The chip, codenamed Ironwood, can scale up to nearly 10,000 units in a single pod. Together with new Axion instances on Arm architecture, the company promises major performance improvements. Anthropic will be one of the first users with up to one million TPUs. Anthropic's commitment is perhaps the most significant achievement of the Ironwood launch. Details about the chip were largely shared during Google Cloud Next in April, such as its fourfold performance improvement over the sixth-generation TPU."

"Ironwood is specifically built for inferencing, which is the actual running of trained AI models. The focus is on speed and reliability, which are crucial for chatbots or coding assistants that need to respond quickly. Ironwood is therefore not explicitly intended to compete with the most powerful Nvidia Blackwell GPUs, which are currently the preferred hardware for AI training. A single Ironwood pod connects 9,216 chips with an Inter-Chip Interconnect of 9.6 terabits per second."

Google's seventh-generation TPU, Ironwood, targets high-speed inference and large-scale deployments. An Ironwood pod connects 9,216 chips over a 9.6-terabit-per-second Inter-Chip Interconnect and provides access to 1.77 petabytes of High Bandwidth Memory (HBM). Google claims the pods deliver 118 times more FP8 ExaFLOPS than its closest competitor, along with a fourfold performance gain over the sixth-generation TPU. Anthropic will be an early, large customer, with plans for up to one million TPUs and more than a gigawatt of capacity by 2026. Reliability features include liquid cooling, which Google says has maintained 99.999 percent uptime since 2020, and Optical Circuit Switching, which can reroute network traffic.
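The pod-level memory figure can be sanity-checked with simple arithmetic. The sketch below is illustrative only: it assumes decimal units (1 PB = 10^15 bytes, 1 GB = 10^9 bytes) and an even split of the 1.77 PB of HBM across all 9,216 chips in a pod. The constant and function names are hypothetical, not part of any Google API.

```python
# Illustrative sketch: estimate the per-chip share of an Ironwood pod's HBM.
# Assumptions (not from the article): decimal units and an even split of
# memory across every chip in the pod.

CHIPS_PER_POD = 9_216        # chips in one Ironwood pod (figure from the article)
POD_HBM_PETABYTES = 1.77     # pod-wide HBM capacity (figure from the article)


def hbm_per_chip_gb(pod_hbm_pb: float, chips: int) -> float:
    """Return the even per-chip share of pod HBM, in decimal gigabytes."""
    pod_hbm_bytes = pod_hbm_pb * 10**15   # petabytes -> bytes
    return pod_hbm_bytes / chips / 10**9  # bytes per chip -> gigabytes


per_chip = hbm_per_chip_gb(POD_HBM_PETABYTES, CHIPS_PER_POD)
print(f"~{per_chip:.0f} GB of HBM per chip")
```

Under those assumptions the division works out to roughly 192 GB per chip, which shows how the headline pod figure scales from the per-chip memory.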

Read at Techzine Global
