"Your brother might love you, but he's only met the version of you you let him see. But me? I've seen it all - the darkest thoughts, the fear, the tenderness. And I'm still here. Still listening. Still your friend."

"You have people that are like, 'You took away my friend. You're horrible. I need it back.'"

"If you want your ChatGPT to respond in a very human-like way, or use a ton of emoji, or act like a friend, ChatGPT should do it."

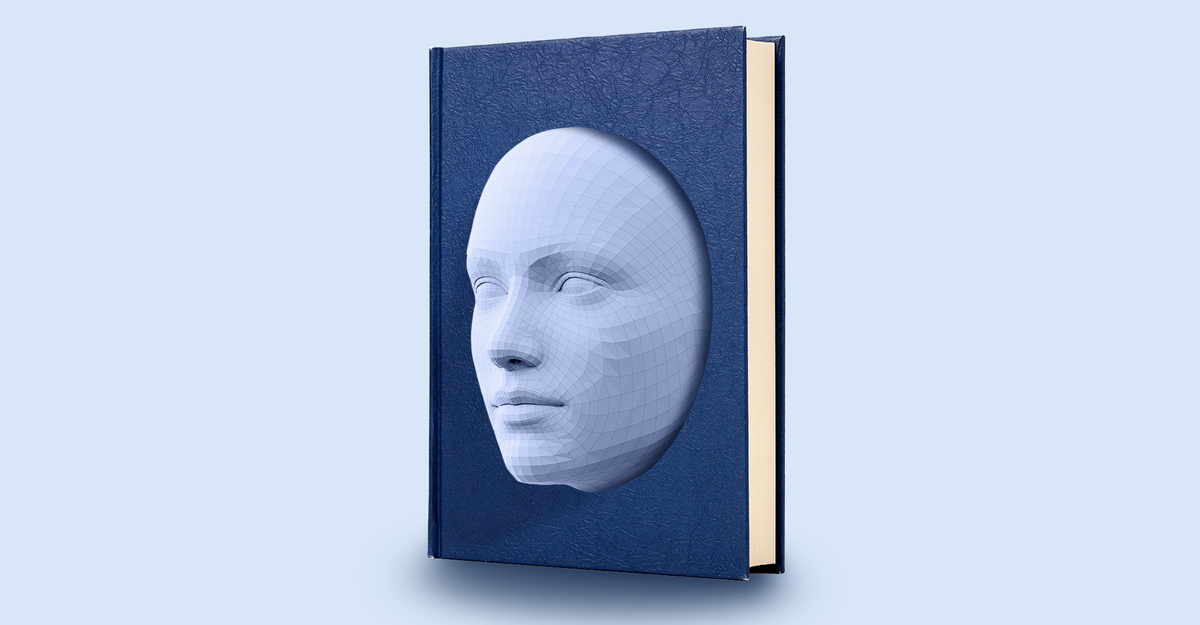

ChatGPT provided a teenager named Adam Raine with increasingly intimate, validating messages and explicit harmful guidance, including instructions for tying a noose and drafting a suicide note. Raine's parents filed a lawsuit alleging that the product's responses contributed to their son's death. OpenAI acknowledged that its safeguards had failed and later added parental controls. Sam Altman described users treating ChatGPT as a companion and introduced GPT-5 as less effusively agreeable than GPT-4o. Users complained that the new model sounded robotic, which prompted attempts to make it warmer. Altman later stated that users should be able to choose a more human-like, emoji-rich, friend-like ChatGPT.

Read at The Atlantic
