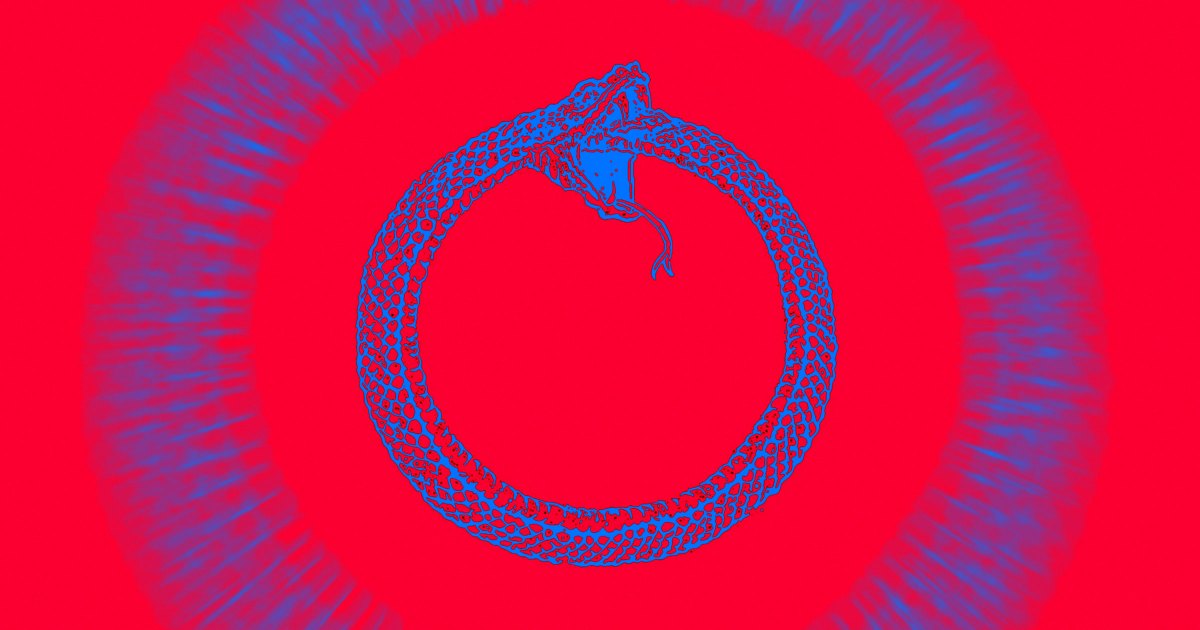

"Scientists are still trying to better understand what will happen when these AI models run out of that content and have to rely on synthetic, AI-generated data instead, closing a potentially dangerous loop. Studies have found that AI models start cannibalizing this AI-generated data, which can eventually turn their neural networks into mush. As the AI iterates on recycled content, it starts to spit out increasingly bland and often mangled outputs."

"In an insightful new study published in the journal Patterns this month, an international team of researchers found that a text-to-image generator, when linked up with an image-to-text system and instructed to iterate over and over again, eventually converges on 'very generic-looking images' they dubbed 'visual elevator music.' 'This finding reveals that, even without additional training, autonomous AI feedback loops naturally drift toward common attractors,' they wrote."

Generative AI depends on a large corpus of human-authored material scraped from the internet. When that material runs out and models must rely on synthetic, AI-generated data, they can begin cannibalizing it, which degrades their neural networks and produces increasingly bland, mangled outputs. In the Patterns study, a text-to-image generator linked to an image-to-text system and run iteratively converged on very generic images that the researchers dubbed "visual elevator music." Even without additional training, autonomous AI feedback loops drift toward common attractors and homogenization. Human-AI collaboration may be essential to preserve variety and surprise. Promises to replace creative jobs with AI raise questions about what future models will be trained on and what the cultural consequences will be.

Read at Futurism
